Fast and reliable

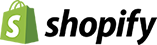

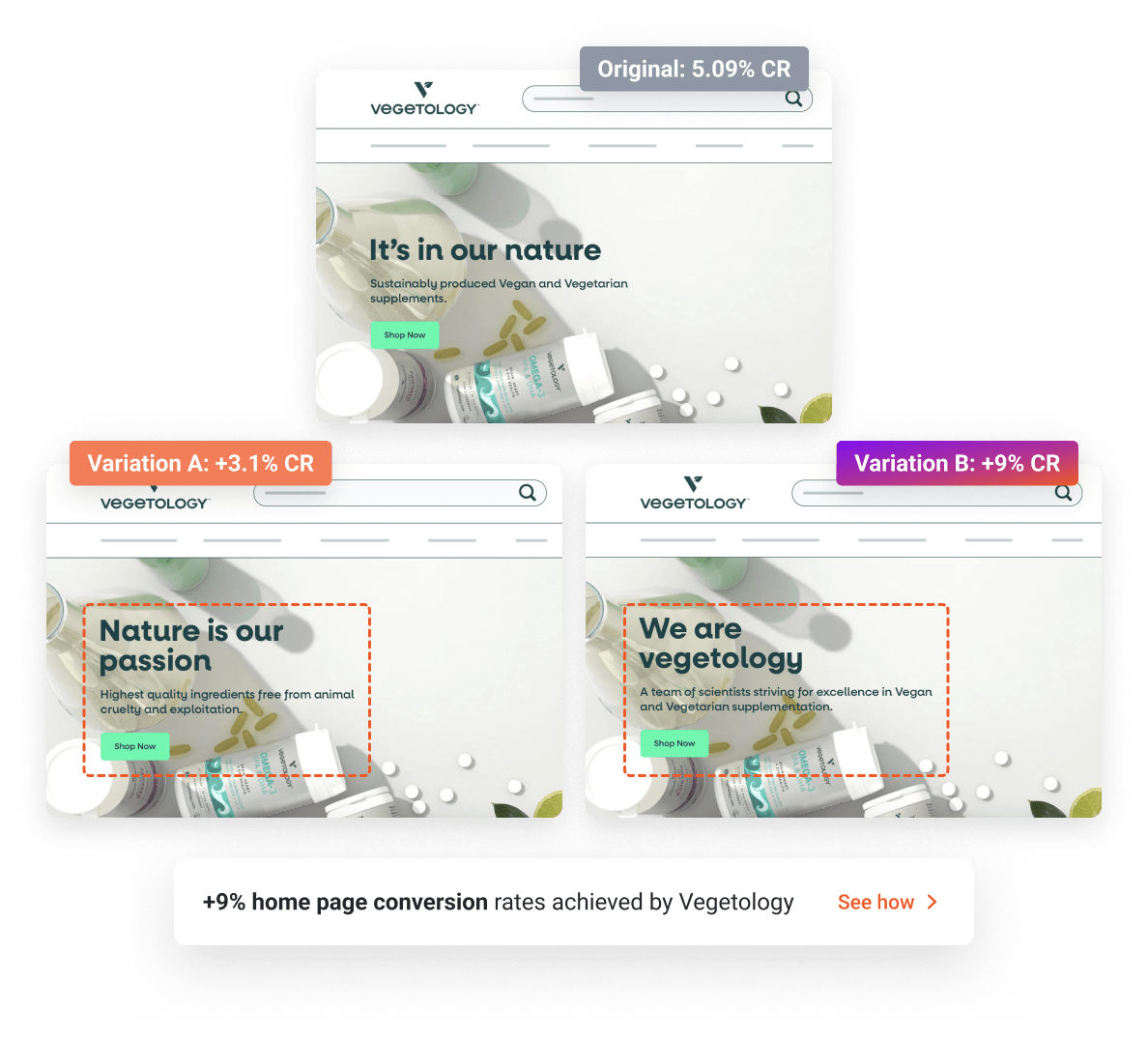

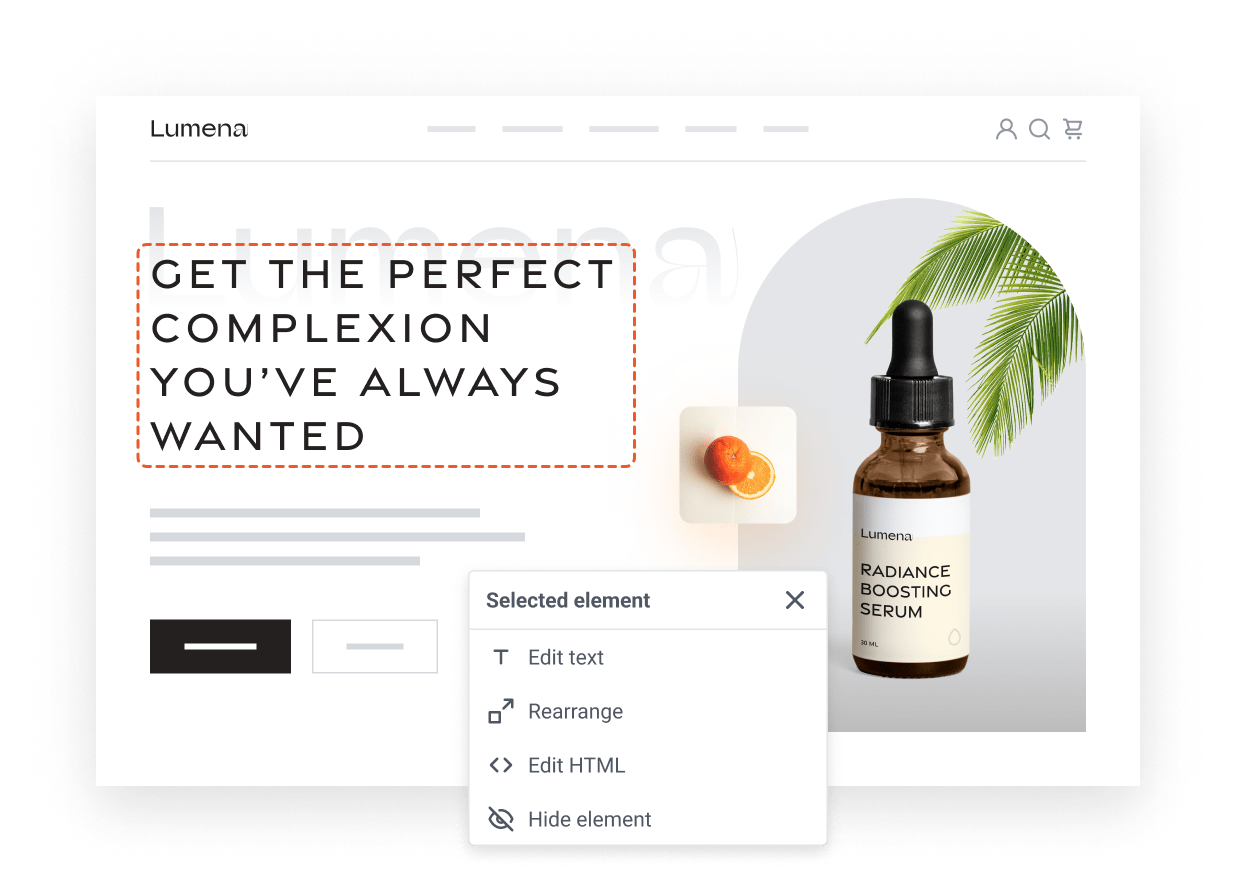

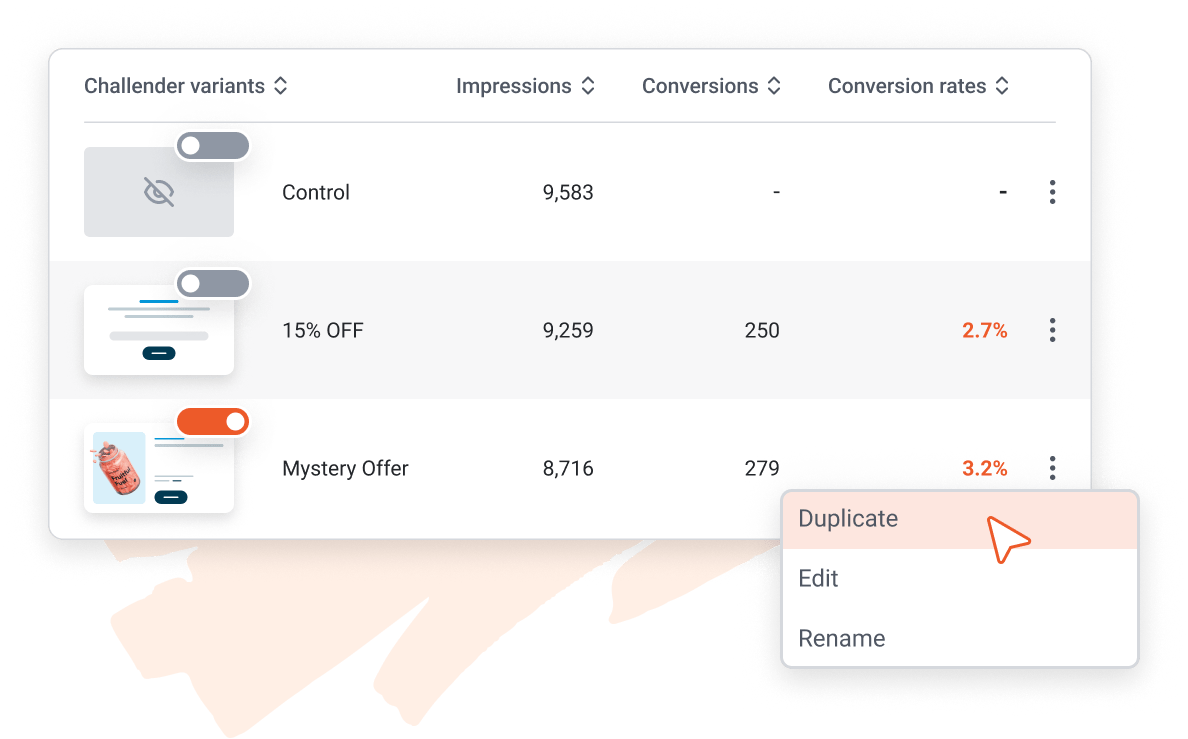

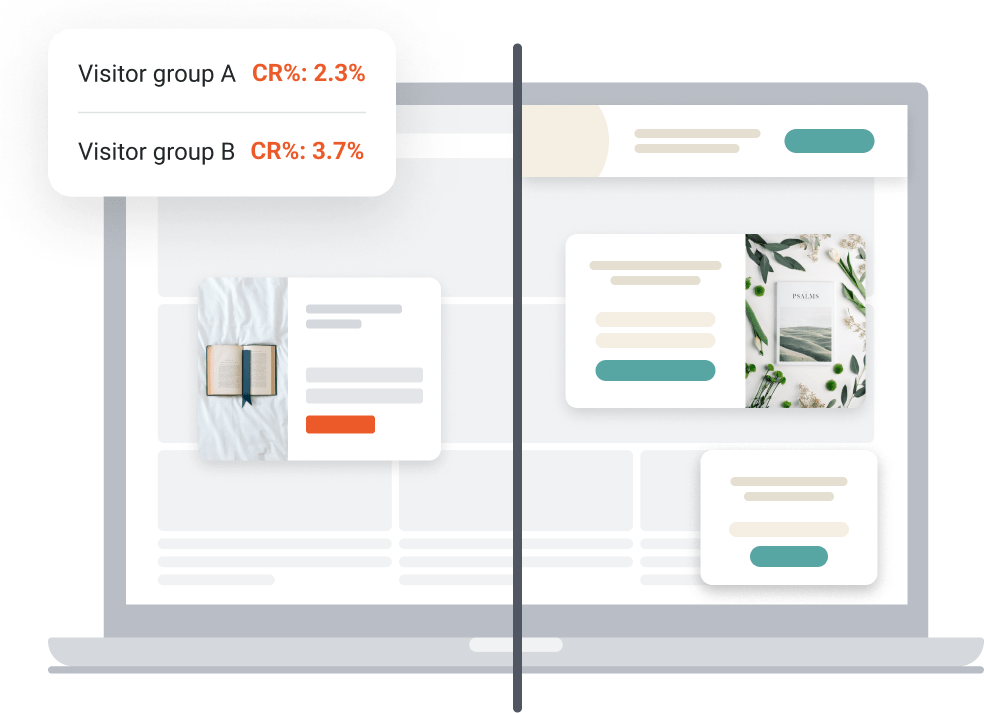

A/B testing for ecommerce sites

Improve conversion rates by testing landing pages, popups or complete multi-step journeys against each other. Now with AI.✨

FREE PLAN AVAILABLE. NO CREDIT CARD NEEDED.

450+ reviews on Shopify