What’s the ultimate goal when you’re creating your webpages, popups, email campaigns, and ads? To get people to engage and take action.

But figuring out the best way to get them to do that isn’t quite so simple. Even when you make decisions based on past events, there’s still a risk of falling prey to the gambler’s fallacy: the false belief that the past outcomes of independent events influence future ones.

Nobel laureate Daniel Kahneman’s theory probably says it best: intuitive thinking is faster than a rational approach but more prone to error.

Enter A/B tests, an experiment-driven method of making better marketing decisions.

This article will walk you through everything you need to know about A/B testing, a simple strategy that helped Obama raise an extra $60 million in donations for his nomination campaign. You’ll see exactly how businesses use A/B tests to ace their conversion goals and pick up actionable tips your brand can use for similar results.

Let’s get started!

What is A/B testing?

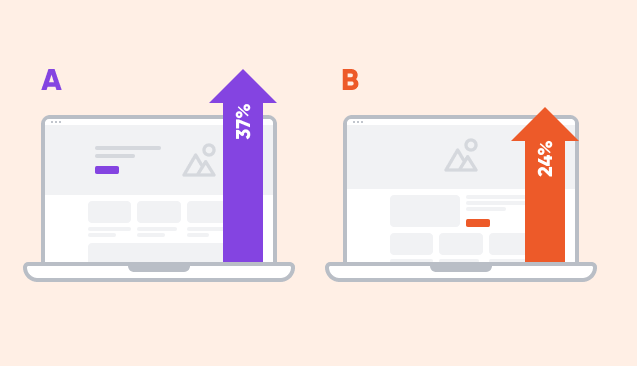

A/B testing (or split testing) is a method used to compare the performance of two different versions of a variable (e.g. a web page).

You show the two versions to different segments of website visitors and then measure which variant results in a higher conversion rate.

The higher-converting version is called “the winning variant,” and it becomes the basis for future tests intended to drive more conversions.

Let’s say a company wants to test variations of a landing page. They have two versions:

- Version A features a red button

- Version B features a blue button

In this case, they show version A to half of their website visitors and version B to the other half.

Then, they collect data on which of the two versions has a better conversion rate. Once they achieve a statistically significant result, they activate the better-performing variant. But A/B testing shouldn’t end here. They should continue running further A/B tests to improve this winning variant even more.
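
If you’re curious how a 50/50 split like this can work under the hood, here’s a minimal Python sketch. It assumes each visitor has a stable ID (for example, one stored in a cookie). The `assign_variant` function and the `button-color` experiment name are made up for illustration, and real testing tools may handle assignment differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "button-color") -> str:
    """Deterministically assign a visitor to version A or B (roughly 50/50)."""
    # Hash the experiment name together with the visitor ID so that the same
    # visitor always lands in the same version for this experiment.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


# Simulate 10,000 hypothetical visitors and count how many see each version.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1

print(counts)  # the two counts should be close to an even split
```

Hashing the ID (rather than flipping a coin on every page view) means a returning visitor keeps seeing the same version, which keeps the comparison clean.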

This was just one example, but A/B tests are not limited to web pages.

You can also use this methodology to test different versions of a blog post, a sign-up form, an email, or ad copy. In fact, in Databox’s survey, over 57% of the companies confirmed that they A/B test their Facebook ad campaigns every time.

By conducting A/B tests, you can stop relying on intuition and instead base your decisions on reliable data, which can skyrocket conversion rates in unimaginable ways. And while conversion rate optimization is often the desired outcome, there are several other positive results you can expect.

Let’s consider a few reasons why split testing should be part of your marketing strategy, regardless of your budget or industry.

Why should you run A/B tests?

The fact that A/B testing is the second most popular CRO method shows just how much good it can do.

Here are just some of the benefits you’ll see if you do A/B testing:

1. A better understanding of your website visitors

Running A/B tests helps you gain a deeper understanding of what your target audience wants through their behavior on your website. This knowledge will help you optimize your future marketing campaigns.

By split-testing different elements on your web page, you can also determine which design, copy, and layout elements work best for your web traffic.

2. Data-backed decisions you can feel confident about

Relying on gut feelings might be a risk worth taking when deciding between pizza flavors to try out… but it’s most certainly NOT the best approach when you’re deciding how best to invest a tight marketing budget!

With A/B testing, you can make data-driven decisions based on customer behavior, which is just all-around smart.

3. Improved conversion rates

One of the most compelling reasons to conduct a split test is the potential for significant improvements in conversion rates.

A prime example of this is the case of Obvi. They increased conversion rates on a Black Friday popup by 36% in just one week! Pretty incredible that making a simple tweak boosted conversions by that much, right?

By determining statistical significance and analyzing test results, you can make informed decisions on marketing strategies and optimize your landing pages for more conversions.

4. A higher ROI

When you A/B test your campaigns, you’ll speed up the process of discovering what works best for your audience.

Rather than revamping an entire marketing campaign, you may find that you can make one or two small tweaks that will make a huge difference.

You’ll be able to test your hypotheses and prove (or disprove) them, so every change you make moves your campaign in the right direction.

As a result, you’ll save time and money, increasing the ROI of your campaigns.

What should you A/B test on your landing page?

Convinced that A/B testing is worth your while? Great. Now it’s time to take a look at exactly which particular elements you should be split testing.

Here are a few examples of variables you should test on your landing pages.

1. Main headline and subheadline

Creating and testing different variations of headlines and subheadlines for a page is a great place to start.

These two elements are found above the fold, meaning they’re almost always what people see first. They can mean the difference between “hooking” your visitors and losing them.

Experiment with different wording, lengths, and tones to see which combination resonates most with your target audience.

Consider incorporating elements like urgency, curiosity, or solution-oriented language to capture attention and compel further exploration.

2. Value proposition

Your value proposition should clearly articulate the unique benefits or solutions your product or service offers to customers.

A/B testing can help refine this messaging by comparing variations in wording, emphasis, and presentation style.

Pay attention to how different value propositions address customer pain points, highlight key features, and differentiate your offering from competitors.

3. Calls-to-action (CTA)

CTAs play a crucial role in guiding visitors towards desired actions, whether it’s making a purchase, signing up for a newsletter, or downloading a resource.

Test different aspects of your CTAs, including text, color, size, and placement, to optimize their effectiveness.

Additionally, consider experimenting with contrasting or complementary colors to make CTAs stand out and entice clicks.

4. Signup forms

One element you can A/B test with signup forms is their length. You may want to use a longer form to get more comprehensive information from your visitors, but your users may prefer a shorter, simpler form.

By conducting a split test, you can determine which length gets you more form submissions and make adjustments accordingly.

Consider testing the style of your forms, too. For instance, you might try a minimalist design against a more complex one.

5. Images

A/B testing images is crucial for determining which visual elements are most effective at capturing the attention of potential customers and driving conversions.

You can compare different product images to see what works best in terms of angles, lighting, and styling. A/B testing will reveal which images most effectively showcase the product and entice customers to make a purchase.

A/B test variations in image size, placement, and format to optimize loading times and visual hierarchy for maximum impact.

6. Page structure

The layout and organization of your landing page can significantly influence user engagement and conversion rates.

You might A/B test the placement of your call-to-action button to see if moving it from the top of the page to the middle of the page increases conversions. You could test a sticky navigation bar against a standard static navbar, or see if featuring your social proof directly under the hero section keeps people scrolling down the page.

Since page structure is such a broad area, remember to test only one thing at a time through A/B testing!

7. Product recommendations

Effective product recommendations can help guide visitors towards relevant offerings and increase overall conversion rates.

Split test different formats for displaying recommendations, including grids, carousels, and lists, to identify the most visually appealing and user-friendly presentation.

Experiment with personalized recommendations based on user behavior, demographics, or past interactions to enhance relevance and engagement.

8. Offers

Promotional offers can be powerful incentives for driving conversions, but their effectiveness depends on factors like relevance, exclusivity, and perceived value.

A/B test different types of offers, such as discounts, freebies, or limited-time deals, to determine which resonates most with your audience.

A clothing brand may choose to test these two different offers: “20% off your first purchase” vs. “Free shipping on your first order.”

You can also A/B test different elements of an offer, such as its language, placement, and design.

Boosting the sense of urgency by including wording like “limited time” might increase conversions, or simply using a different color scheme might make it more eye-catching. The only way to know for sure? Test!

What are some alternative testing methods?

While A/B testing is a widely used and effective method for optimizing digital experiences, there are other testing techniques available that can offer deeper insights and greater flexibility in experimentation.

Let’s explore three alternative testing methods:

1. Split URL testing

Split URL testing, also known as split domain testing, involves directing users to different URLs or pages based on predefined variations.

Unlike traditional A/B testing, which presents variations within the same web page, split URL testing allows for more extensive changes, such as modifications to site structure, navigation, or design elements.

How it works:

- Create multiple versions of the landing page or site component you want to test.

- Assign each version a unique URL or domain.

- Use a testing platform or web server configuration to randomly distribute incoming traffic to each URL.

- Measure and analyze user interactions and conversion rates for each variation using an analytics tool (e.g. Google Analytics).

2. Multivariate testing

Multivariate testing allows for simultaneous testing of multiple variables within a single web page or experience.

The multivariate test method is ideal for understanding how different combinations of elements interact and impact user behavior. Rather than testing entire page variations, multivariate testing focuses on specific elements, such as headlines, images, CTAs, or form fields, to identify the most effective combinations.

How it works:

- Identify the elements within a web page that you want to test (e.g. headline, image, CTA).

- Create different variations for each element, testing different options for each.

- Use a testing platform to dynamically generate combinations of these elements.

- Monitor user interactions and conversion rates to determine which element combinations yield the best results.
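
To get a feel for how quickly the combinations add up, here’s a small Python sketch that generates every combination of the elements under test. The element names and copy are hypothetical examples, and a testing platform would normally do this step for you.

```python
from itertools import product

# Hypothetical elements and the variations we want to try for each one.
elements = {
    "headline": ["Save time today", "Work smarter"],
    "image": ["hero-photo", "product-shot"],
    "cta": ["Start free trial", "Get started", "Buy now"],
}


def build_combinations(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one dict per combination, covering every mix of variations."""
    names = list(elements)
    return [dict(zip(names, values)) for values in product(*elements.values())]


combinations = build_combinations(elements)
print(len(combinations))  # 2 headlines x 2 images x 3 CTAs = 12 combinations
print(combinations[0])
```

Because every combination needs its own share of traffic, multivariate tests generally call for more visitors than a simple A/B test.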

3. Multi-page testing

Multi-page testing, also known as funnel testing or sequential testing, involves testing variations across multiple pages or steps within a user journey.

This method is particularly useful for optimizing complex conversion paths, such as checkout flows, signup processes, or user onboarding experiences.

By analyzing how changes to individual pages impact overall conversion rates, businesses can identify friction points and optimize the entire user journey.

How it works:

- Map out the sequential steps of the user journey you want to test (e.g. landing page, product page, checkout).

- Create variations for each page within the user journey, testing different layouts, content, or functionality.

- Use a testing platform to direct users through different versions of the user journey.

- Analyze user behavior and conversion rates at each step to identify areas for improvement and optimization.
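
As a rough illustration of that last step, the Python sketch below compares step-by-step conversion rates for two versions of a journey. The visitor counts, step names, and the `funnel_rates` helper are all invented for the example.

```python
def funnel_rates(step_counts: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return the share of visitors who continue from each step to the next."""
    rates = []
    for (step, visitors), (next_step, next_visitors) in zip(step_counts, step_counts[1:]):
        rate = next_visitors / visitors if visitors else 0.0
        rates.append((f"{step} -> {next_step}", rate))
    return rates


# Hypothetical visitor counts at each step for two versions of the journey.
journey_a = [("landing page", 1000), ("product page", 400), ("checkout", 100)]
journey_b = [("landing page", 1000), ("product page", 500), ("checkout", 110)]

for name, journey in (("A", journey_a), ("B", journey_b)):
    overall = journey[-1][1] / journey[0][1]
    print(f"Journey {name}: overall conversion {overall:.1%}")
    for transition, rate in funnel_rates(journey):
        print(f"  {transition}: {rate:.1%}")
```

In this made-up data, journey B sends more people to the product page but loses a larger share of them before checkout, which is exactly the kind of friction point a multi-page test is meant to surface.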

How to choose the right testing method?

Each testing method offers unique advantages and challenges, and the choice of method depends on factors such as the complexity of the test, the level of granularity desired, and the resources available.

While A/B testing remains a foundational technique for optimization, experimenting with alternative methods like split URL testing, multivariate testing, and multi-page testing can provide deeper insights and drive more significant improvements in user experience and conversion rates.

A step-by-step guide to conducting A/B testing

If you’re worried that A/B testing is too difficult, too much work, or too complex, stay tuned. When you run your A/B testing according to this guide, you’ll be among the 63% of companies who agree that A/B testing is effortless.

Step 1: Analyze your website

You’ll want to start by studying your site’s current state, including its overall design and layout, user flow, and the performance of its existing elements (buttons, forms, calls-to-action, etc.).

Your website’s performance data, such as traffic and conversion metrics, also gives you insight into underperforming areas so you can prioritize them for testing.

For example, if you discover that a high percentage of visitors leave after viewing only one page, that could indicate that your website’s navigation is not optimal. An improved UX design can boost conversion by up to 400%, but it all starts with keeping visitors engaged and on the site longer.

Google Analytics is a helpful tool for measuring goals. Here are some reports you can check out:

- New vs returning visitors

- Visitors using mobile devices vs desktops

- Source/medium and campaigns

- Landing pages

- Keywords

- E-commerce overview

- Shopping behavior

Step 2: Brainstorm ideas and formulate hypotheses

This step involves generating a list of potential changes you want to test and forming a hypothesis about how each of these changes will affect the desired outcome.

For example, if the goal is to increase website conversions, one idea may be to change the color of the “Shop Now” button from red to green. The corresponding hypothesis would be that the change in color will lead to an increase in conversions.

A clear hypothesis helps narrow the focus of the split test and guides the next stages in the process.

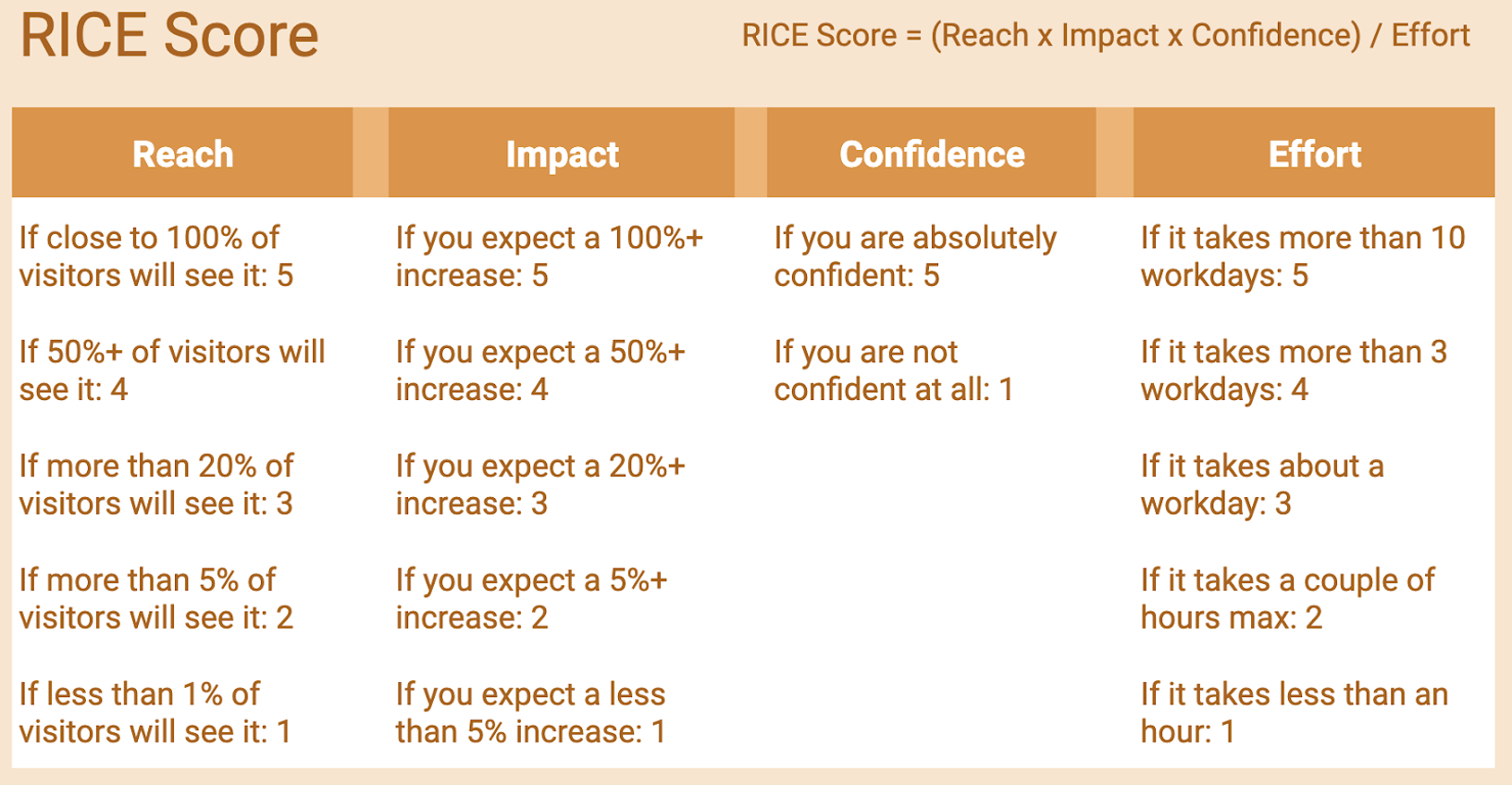

Step 3: Prioritize ideas

Prioritizing ideas allows you to home in on the most promising hypotheses and test them first.

One effective approach for this is to use the RICE method, which combines four factors (reach, impact, confidence, and effort) to give each idea a score.

Here’s a breakdown of the acronym:

- Reach: The number of users or visitors the change will affect.

- Impact: The potential effect of the change on key metrics.

- Confidence: How sure you are that the change will have the desired effect.

- Effort: The resources required to implement the change.

Considering all four factors helps maximize the return on your testing efforts.
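
One common way to turn these four factors into a single score is to multiply reach, impact, and confidence, then divide by effort. Here’s a minimal Python sketch of that calculation. The ideas, numbers, and rating scales are made up for illustration, so swap in whatever scales your team uses.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float       # visitors affected per month (assumed unit)
    impact: float      # e.g. 0.5 = low, 1 = medium, 2 = high (assumed scale)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-weeks (assumed unit)

    def rice_score(self) -> float:
        """Commonly used RICE formula: (reach * impact * confidence) / effort."""
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical test ideas.
ideas = [
    Idea("Green 'Shop Now' button", reach=5000, impact=1, confidence=0.8, effort=1),
    Idea("Shorter signup form", reach=2000, impact=2, confidence=0.5, effort=2),
    Idea("New hero headline", reach=8000, impact=2, confidence=0.5, effort=4),
]

# Highest score first: these are the ideas to test first.
ranked = sorted(ideas, key=lambda idea: idea.rice_score(), reverse=True)
for idea in ranked:
    print(f"{idea.name}: {idea.rice_score():.0f}")
```

Dividing by effort is what keeps cheap, promising ideas near the top of the list.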

Step 4: Create challenger variants

Next, it’s time to create alternative versions of the website element to test against the original, or “control” version.

For example, if you’re testing the effectiveness of the call-to-action button on your website, the challenger variant of the button might be different in color or size, or it may have different copy.

Creating and testing multiple challenger variants to find the best solution can also be effective. In the call-to-action button example above, you could create three different variants (one with a different color, one with a different size, and one with different copy) and test them all against the control button to see which performs best.

Step 5: Run the test

This is the phase where you execute the experiment and collect results. Run the test long enough to gather sufficient data to make informed decisions about the versions being tested.

Your average daily and monthly visitors are vital factors here. If your website sees a high volume of daily visitors, you can probably run the test for a short period. In contrast, you’ll need to run the test longer if you have a lower volume of visitors so that you can gather enough data.

The number of variants you’re testing may also impact the duration of the test. The more variants you have, the more time you’ll need to gather data on each one.
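
For a back-of-the-envelope estimate of test length, you can divide the total number of visitors you need by your daily traffic. The Python sketch below assumes you already know how many visitors each variant needs (the `required_per_variant` figure here is hypothetical) and that traffic is split evenly between variants.

```python
import math


def estimate_test_days(required_per_variant: int, daily_visitors: int, num_variants: int = 2) -> int:
    """Estimate how many days a test needs, assuming an even traffic split."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = required_per_variant * num_variants
    return math.ceil(total_needed / daily_visitors)


# Hypothetical requirement: 5,000 visitors per variant.
print(estimate_test_days(5000, daily_visitors=1000))                  # high traffic: 10 days
print(estimate_test_days(5000, daily_visitors=200))                   # low traffic: 50 days
print(estimate_test_days(5000, daily_visitors=1000, num_variants=4))  # more variants: 20 days
```

Treat the result as a floor rather than a target, since user behavior can also vary by day of the week.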

Step 6: Evaluate test results and optimize

The final step is to evaluate the results and optimize accordingly. Here, you analyze the data collected during the test to determine which variant performed better. You can do this by comparing metrics such as conversion rate, bounce rate, and click-through rate between the control version and the challenger version.

If the results show that one variant performed significantly better than the other, this version becomes the winner. You can then optimize the campaign using the winning variant to improve performance.

However, if the results are inconclusive or do not support the initial hypothesis, further optimization is necessary. This usually involves implementing new ideas or conducting additional tests to better understand the results.

For example, if you run the test on an email campaign and the results show no significant difference in open rates, optimize the campaign by testing new subject lines or changing the email design.

4 real-life A/B testing examples

Ok, we’re done singing the praises of A/B tests and their magic! Take a look at a few real-world examples of top brands that have used split tests:

1. A/B test the design of your messages

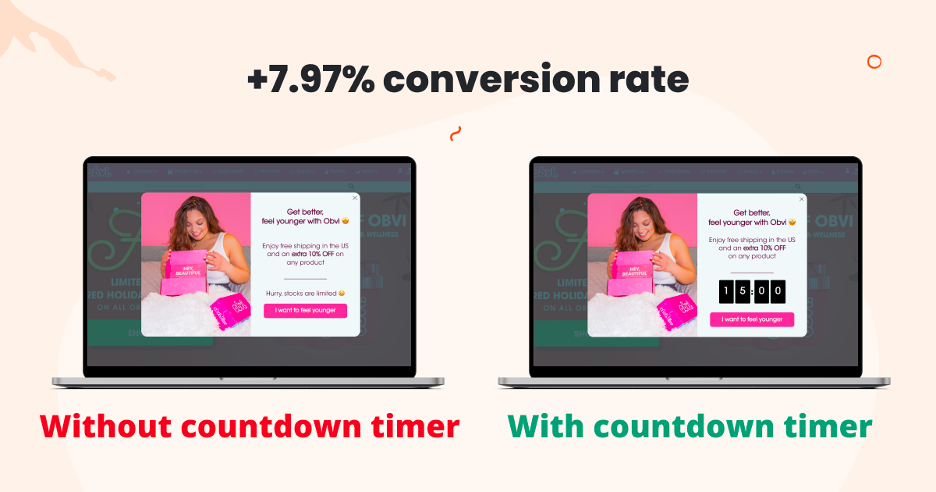

In this example, DTC brand Obvi wanted to test their hypothesis that adding a countdown timer to their discount popup would increase the sense of urgency and result in higher conversion and coupon redemption rates.

They created two variations of the popup, one with a timer and the other without, and tested them with a sample of their target audience. They were right!

The variant with a countdown timer converted 7.97% better than the one without, indicating that the timer was effective at increasing urgency and conversions.

2. A/B test the effectiveness of teasers

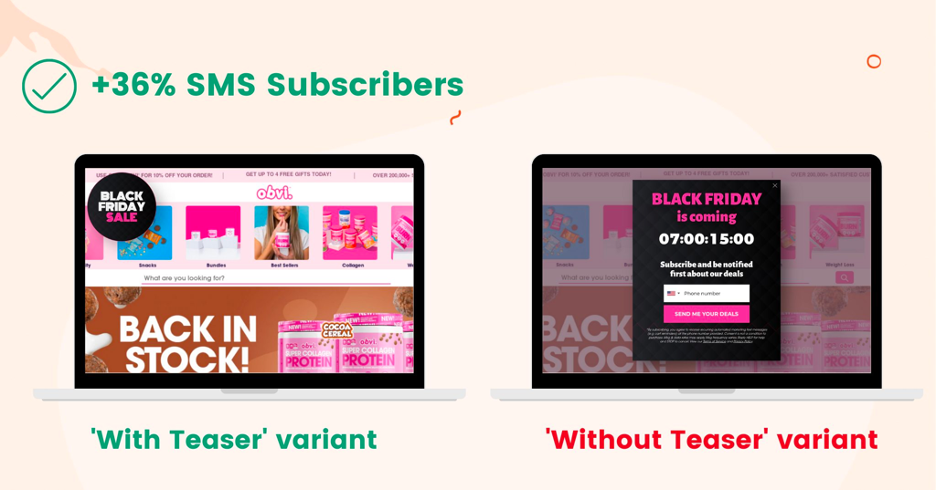

In this second example from Obvi, they tested two versions of their Black Friday popup: one with a teaser (a small preview of the popup) and one without.

The variant with the teaser resulted in 36% more SMS subscribers and a higher conversion rate for the campaign. So they learned that including a teaser in their popup was an effective strategy for increasing engagement and driving more sales.

3. A/B test different types of campaigns

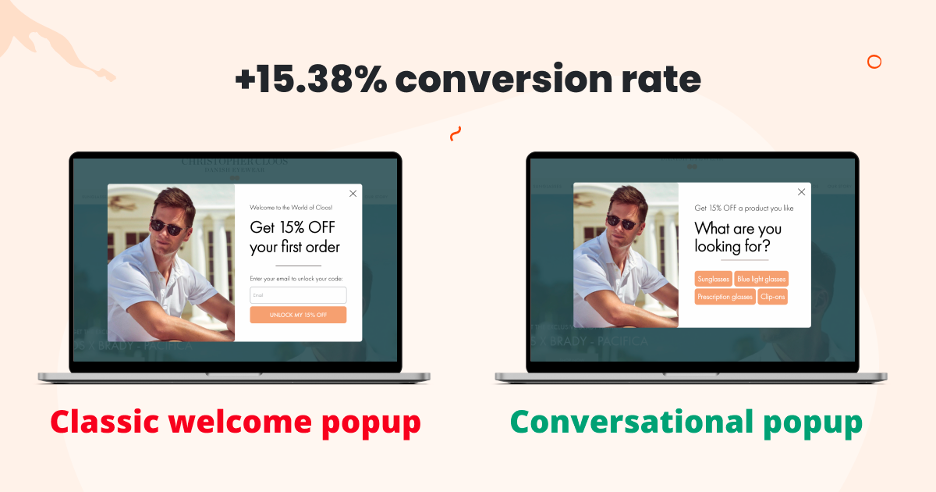

A/B testing different types of campaigns, like in this example from the team at Christopher Cloos, is a way to discover which version resonates better with your visitors.

In this case, the team tested a classic welcome popup against a more personalized conversational popup and found that the conversational popup converted at a higher rate (15.38% higher, to be exact).

This test was run for a duration of one month, which was ideal based on the store’s website traffic. If they’d run the test for a shorter period, it may not have given the conversational popup a chance to fully perform.

Also, note that longer-duration tests can be impacted by external factors such as seasonality, trends, or changes in consumer behavior, which could influence results.

4. A/B test the headline on your home page

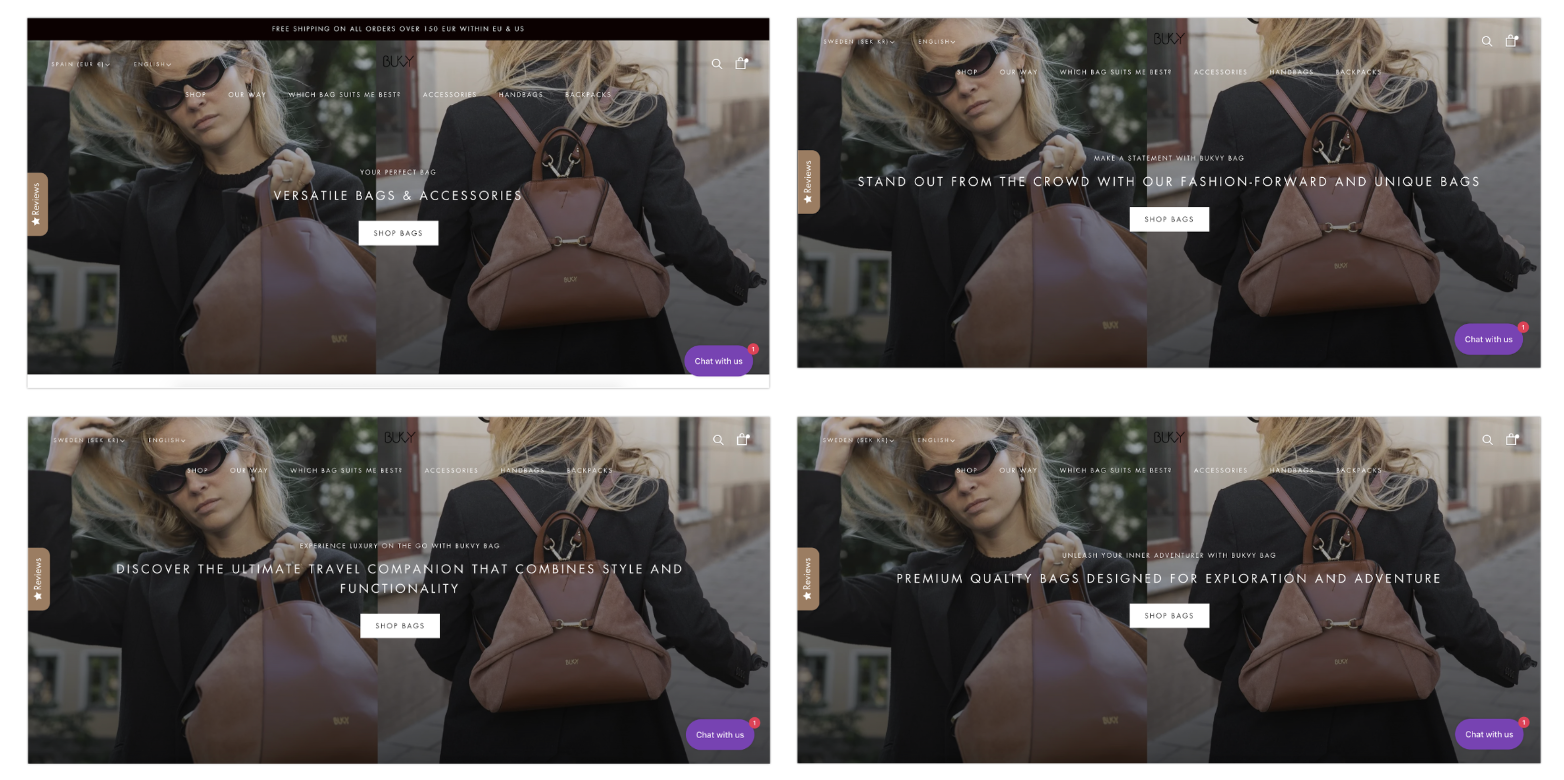

Bukvybag, a retailer specializing in versatile bags and accessories, recognized the critical role that the home page headline plays in capturing visitors’ attention and conveying the brand’s value proposition effectively. So they decided to run an A/B test on their home page headline.

They tested multiple headline variations, each highlighting a different aspect of their value proposition:

- Original: Versatile bags & accessories

- Variant A: Stand out from the crowd with our fashion-forward and unique bags

- Variant B: Discover the ultimate travel companion that combines style and functionality

- Variant C: Premium quality bags designed for exploration and adventure

The test paid off: they achieved a 45% upswing in orders thanks to this A/B test.

Recommended reading: 7 Easy-to-Implement A/B Testing Ideas to Grow Your Revenue & Increase Landing Page Conversion Rates

3 A/B testing mistakes to avoid

The last thing you want is to dedicate all that effort and marketing budget to split testing, only to get a false positive or an inaccurate test result. Here’s how to avoid the most common (and costly!) mistakes:

Mistake 1: Changing more than one element

When conducting an A/B test, you should only change one element at a time so that you can accurately determine the impact of that specific change.

Are you testing the effect of changing a button color? Then change only the color of the button in the challenger variant and nothing else. If you also change the text on the button or the layout of the page, you’ll find it difficult to determine which change had the greatest impact on the results.

Changing multiple elements at once can also lead to inaccurate results as the changes may interact with one another in unexpected ways.

Mistake 2: Ignoring the statistical significance

In A/B testing, it’s possible that the results of a test come from chance rather than a true difference in the effectiveness of the variants. This can lead to false conclusions about which variant is better, resulting in poor decisions based on inaccurate data.

Here’s an example: your test shows that variation A has a slightly higher conversion rate than variation B, but you don’t take into account how significant the results are. So you end up concluding that variation A is the better option. However, considering the statistical significance would have made it clear there wasn’t enough evidence to conclude that variant A was indeed better.

Ignoring statistical significance in A/B testing leads to a false sense of confidence in the results, causing you to implement changes that may not have any real impact on performance.
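
To make this concrete, here’s a minimal Python sketch of one common way to check significance, a two-proportion z-test, using only the standard library. The visitor and conversion numbers are invented, and your testing tool may use a different statistical method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if both variants truly performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test 1: A converts at 4.3%, B at 4.0% (5,000 visitors each).
_, p_small_gap = two_proportion_z_test(215, 5000, 200, 5000)
# Hypothetical test 2: A converts at 5.2%, B at 4.0% (5,000 visitors each).
_, p_large_gap = two_proportion_z_test(260, 5000, 200, 5000)

for label, p in (("small gap", p_small_gap), ("large gap", p_large_gap)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.3f} ({verdict} at the 95% confidence level)")
```

In the first made-up test, variation A looks slightly better, but the p-value is well above 0.05, so there isn’t enough evidence to call it the winner.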

Mistake 3: Not running tests for long enough

This next mistake goes hand in hand with mistake #2: ending a split test before it has had enough time to collect sufficient data to produce a statistically significant result. You’ll end up with inaccurate conclusions about the element you’re testing.

Imagine an A/B test runs for only a week and you declare a particular variant the winner. In reality, the results were only due to chance. Make sure you’re running tests long enough to accurately capture the differences between the versions.

FAQ

How to achieve statistically significant results?

Achieving statistically significant results in A/B testing requires careful planning, execution, and analysis. To ensure statistical significance, you need to:

- Determine a sufficient sample size: Calculate the minimum number of visitors or participants needed for each variation to detect meaningful differences in conversion rates.

- Set a confidence level and statistical power: Choose appropriate thresholds for confidence level (typically 95% or higher) and statistical power (often 80% or higher) to minimize the risk of Type I and Type II errors.

- Run tests for an adequate duration: Allow tests to run long enough to capture variations in user behavior across different time periods and account for any external factors that may influence results.

- Monitor and analyze results: Use statistical methods such as hypothesis testing and confidence intervals to evaluate the significance of observed differences between variations and make informed decisions based on the data.

By following these principles and employing robust testing methodologies, you can achieve statistically significant results that provide reliable insights for optimizing your digital experiences.
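
As a rough guide to the first two points, the Python sketch below estimates the sample size per variation using a standard normal-approximation formula for comparing two conversion rates. The baseline rate and expected lift are hypothetical inputs you’d replace with your own, and dedicated sample size calculators may give slightly different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    relative_lift: float,
    confidence: float = 0.95,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed per variation to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # z-scores for the chosen confidence level (two-sided) and statistical power.
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical scenario: 4% baseline conversion rate.
print(sample_size_per_variant(0.04, relative_lift=0.20))  # detect a 20% relative lift
print(sample_size_per_variant(0.04, relative_lift=0.10))  # a smaller lift needs more visitors
```

The smaller the difference you want to detect, the more visitors you need, which is why low-traffic sites often do better testing bold changes.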

What is the best A/B testing tool for landing pages?

OptiMonk is an all-in-one conversion rate optimization tool that’s widely regarded as one of the best A/B testing tools for landing pages. It offers a comprehensive suite of features designed to streamline the A/B testing process and maximize conversions.

With its intuitive interface, powerful analytics, and advanced targeting capabilities, OptiMonk empowers businesses to create and test multiple variations of their landing pages with ease. Whether you’re testing headlines or CTAs, OptiMonk provides the tools and insights you need to make data-driven decisions and achieve your conversion goals.

What are the challenges of split testing?

Split testing, also known as A/B testing, comes with its own set of challenges that businesses may encounter:

- Sample size limitations: Obtaining a large enough sample size to achieve statistically significant results can be challenging, particularly for websites with low traffic volume.

- Test duration: Running tests for an adequate duration to capture variations in user behavior and account for external factors can require patience and careful planning.

- Validity of results: Ensuring that test variations are implemented correctly and that results accurately reflect user preferences and behavior is essential for drawing reliable conclusions.

- Resource constraints: Conducting split tests may require significant time, effort, and resources, particularly for businesses with limited personnel or budget.

- Interpretation of results: Analyzing test results and determining the significance of observed differences between variations can be complex, requiring a solid understanding of statistical principles and methodologies.

Wrapping up

Hopefully, this article has shown you just how critical A/B testing can be for optimizing your online store. Once you understand all the different ways A/B testing can help you improve, it’s hard to believe that only 44% of companies use split testing software!

If your business is not currently running A/B tests, it’s not too late to give your conversion rate the TLC it deserves. By split-testing different variants, you can identify which elements of your existing web pages or marketing campaigns are working (or not) and make strategic changes in line with your goals.

Remember that it’s as easy as creating different versions and comparing the results to determine the best-performing version. Whether you’re a small business owner or a marketing professional, A/B testing is an essential tool to have in your arsenal!