
A/B Testing Basics: A Beginner’s Guide to Optimizing Your Website


Barbara Bartucz

- Conversion

- 6 min read


Are you an absolute beginner to A/B testing with no clue where to start? Or maybe you’ve tried making changes to your ecommerce site, but the needle just isn’t moving?

Either way, you’re in the right place.

Every ecommerce store owner has been there—pouring time and money into a website that doesn’t convert like you hoped. It’s frustrating, confusing, and, frankly, a bit of a mystery.

But what if you could take the guesswork out of optimizing your site? That’s where A/B testing comes in.

This beginner-friendly guide will walk you through everything you need to know to start using A/B testing to boost your conversion rate.

Let’s dive in and get you on the path to a higher-performing website!

What is A/B testing?

A/B testing, often referred to as split testing, is essentially a controlled experiment on your website. You take two versions of the same web page—let’s call them A and B—and show them to different segments of your audience.

By comparing how each version performs, you can determine which one resonates more with your visitors.

The beauty of A/B testing is that it’s all based on real user behavior, allowing you to make changes that are more likely to lead to better results, whether that’s higher conversion rates, more clicks, or improved engagement.

Why should you run A/B tests?

You might be thinking, “Why not just make changes to my website based on best practices or expert advice?”

While there’s value in that approach, A/B testing offers something even more powerful: actual data from your own users.

Running A/B tests allows you to see what works for your specific audience, making your optimization efforts far more effective. Achieving statistically significant results is crucial in this process, because significance tells you that the outcomes of your tests are unlikely to be due to random chance.

Instead of relying on hunches, A/B testing allows you to gain clear, actionable insights into what motivates your website visitors to take action.

This not only boosts your website’s performance but also helps you better understand your audience’s preferences so you can deploy marketing strategies that are more personalized and more effective.

Types of A/B testing

A/B testing comes in several flavors, each suited to different scenarios and goals. Understanding these can help you choose the right approach for your needs.

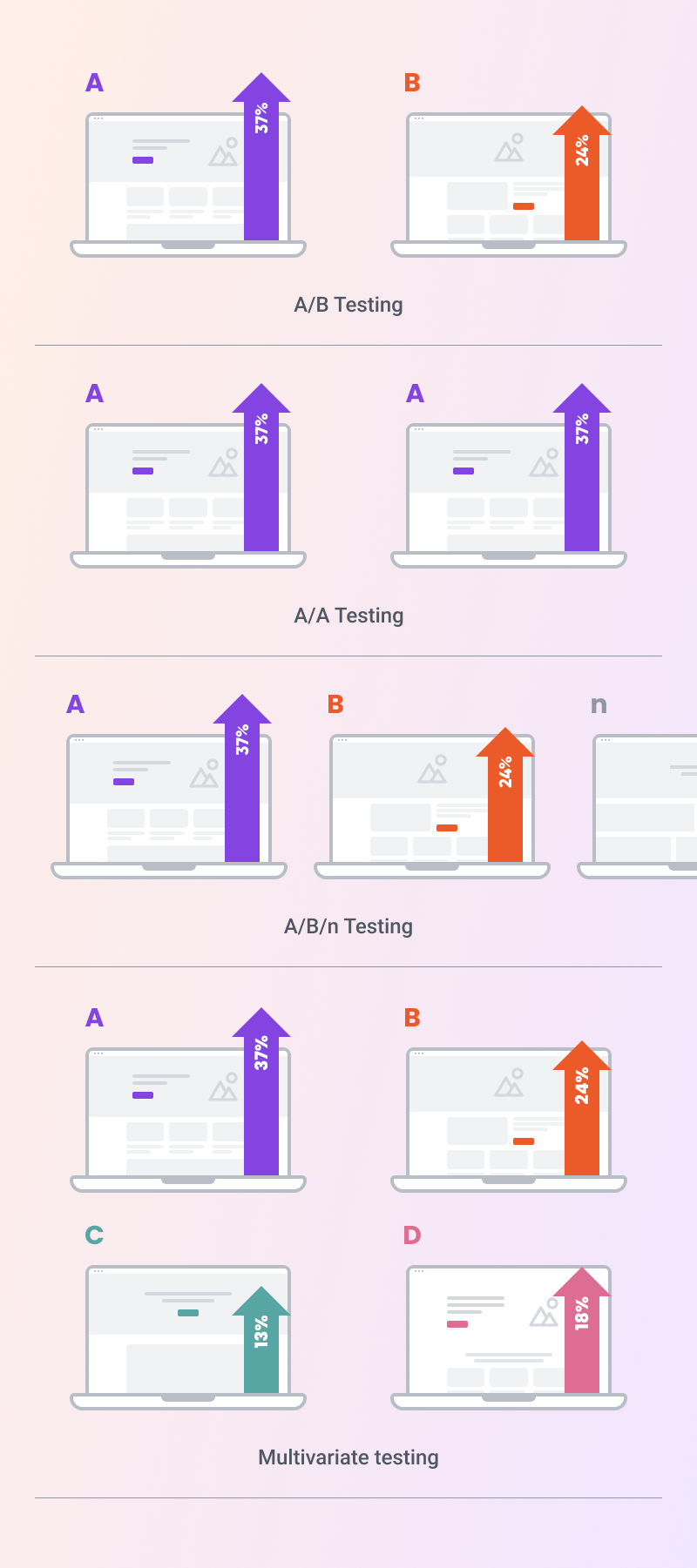

1. A/B testing

This is the classic version of split testing. You pick a single element on your website—like a headline, button, or image—and create two variants of it.

Half of your visitors see version A, and the other half see version B. The version that performs better is your winner. It’s straightforward and perfect for testing simple changes.
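Most testing tools handle the split for you, but it can help to see how one might work under the hood. The sketch below is a minimal Python illustration, assuming each visitor has a stable ID (for example, from a first-party cookie); the helper `assign_variant` and the experiment key are hypothetical. Hashing the ID, rather than flipping a coin on every page view, keeps each visitor in the same version on every visit.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str = "homepage-headline") -> str:
    """Deterministically assign a visitor to version "A" or "B" (hypothetical helper)."""
    # Salting with the experiment name lets separate experiments split traffic independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    # Turn the first 8 bytes of the SHA-256 digest into a bucket number from 0 to 99.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    # Buckets 0-49 see the control (A), buckets 50-99 see the variant (B): a 50/50 split.
    return "A" if bucket < 50 else "B"

# The same visitor always lands in the same version...
print(assign_variant("visitor-123") == assign_variant("visitor-123"))
# ...and across many visitors the split comes out close to half and half.
share_a = sum(assign_variant(f"visitor-{i}") == "A" for i in range(10_000)) / 10_000
print(f"Share of visitors in A: {share_a:.1%}")
```

Changing the `bucket < 50` cutoff is one way such a scheme could send, say, only 10% of traffic to a riskier variant.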

2. A/A testing

In A/A testing, you compare two identical versions of a web page to ensure that your A/B testing setup is reliable.

This might seem redundant, but it helps you confirm that any future differences in performance can be confidently attributed to the changes you make, not to random fluctuations or a faulty test setup.
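To see why an A/A test is a useful sanity check, here is a small simulation sketch in plain Python (no testing tool involved). It assumes a 5% true conversion rate, 1,000 visitors per arm and a pooled two-proportion z-test at the 5% significance level; all of these are illustrative choices. Because both arms are identical, roughly 5% of simulated A/A tests should still come out "significant" purely by chance.

```python
import math
import random

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions at all: nothing to compare
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # P(|Z| >= |z|) for a standard normal Z.
    return math.erfc(abs(z) / math.sqrt(2))

def simulate_aa_tests(runs=500, visitors_per_arm=1000, true_rate=0.05, seed=42):
    """Fraction of simulated A/A tests that look 'significant' at alpha = 0.05."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        # Both arms share the same true conversion rate.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        if two_proportion_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm) < 0.05:
            false_positives += 1
    return false_positives / runs

print(f"A/A tests flagged as significant: {simulate_aa_tests():.1%}")
```

If your real A/A tests show "significant" differences far more often than this baseline, the setup itself deserves a closer look.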

3. A/B/n testing

A/B/n testing is like A/B testing, but on steroids. Instead of just two versions, you can test multiple variations (A, B, C, D, etc.) simultaneously.

This is particularly useful when you have, let’s say, several headline ideas and you want to see which one stands out.

It allows for more nuanced insights, especially when testing complex elements like landing pages or checkout flows.
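One thing to keep in mind with A/B/n tests: every extra variant is another comparison against the control, so the chance that at least one variant "wins" by luck goes up. A common, conservative fix is the Bonferroni correction, which divides the significance threshold by the number of comparisons. The sketch below assumes a pooled two-proportion z-test and uses hypothetical visitor and conversion counts.

```python
import math

def p_value_vs_control(conv_c, n_c, conv_v, n_v):
    """Two-sided p-value of a pooled two-proportion z-test (variant vs. control)."""
    pooled = (conv_c + conv_v) / (n_c + n_v)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_c + 1 / n_v))
    z = (conv_v / n_v - conv_c / n_c) / se
    return math.erfc(abs(z) / math.sqrt(2))

def compare_to_control(control, variants, alpha=0.05):
    """Compare each variant with the control using a Bonferroni-adjusted threshold.

    control: (conversions, visitors); variants: {name: (conversions, visitors)}.
    """
    adjusted_alpha = alpha / len(variants)  # 0.05 / 3 is about 0.0167 for three variants
    results = {}
    for name, (conv, n) in variants.items():
        p = p_value_vs_control(control[0], control[1], conv, n)
        results[name] = (p, p < adjusted_alpha)
    return results

# Hypothetical A/B/n test: control A plus variants B, C and D, 5,000 visitors each.
control = (200, 5000)
variants = {"B": (245, 5000), "C": (265, 5000), "D": (210, 5000)}
for name, (p, significant) in compare_to_control(control, variants).items():
    print(f"{name}: p = {p:.3f}, significant after correction: {significant}")
```

With these numbers, variant B has a p-value of about 0.03, which would pass a plain 0.05 threshold but not the corrected one, while C clears both.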

4. Multivariate testing

Multivariate testing takes things a step further. Instead of testing just one variable (e.g. your headline), it allows you to conduct a test that evaluates multiple elements at once to see how they interact with each other.

For example, you might test different combinations of headlines, images, and call-to-action buttons on a single landing page.

This approach is more complex and typically requires a larger audience, but it can provide deep insights into how various elements work together to influence user behavior.
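The reason multivariate tests need more traffic comes down to multiplication: in a full-factorial design, every option of every element is combined with every option of the others, and each combination needs its own share of visitors. The element options and the per-combination visitor figure below are hypothetical.

```python
from itertools import product

# Hypothetical options for three elements on one landing page.
headlines = ["Free shipping on every order", "Save 20% today", "New arrivals are in"]
images = ["lifestyle-photo", "product-closeup"]
ctas = ["Shop now", "Claim my discount"]

# Full-factorial design: every headline paired with every image and every CTA.
combinations = list(product(headlines, images, ctas))
print(f"{len(combinations)} combinations")  # 3 x 2 x 2 = 12

# Assumed figure: suppose each combination needs 4,000 visitors for a reliable read.
visitors_per_combination = 4_000
print(f"Visitors needed: {len(combinations) * visitors_per_combination:,}")
```

Adding a fourth element with two options would double this to 24 combinations, compared with just two versions in a classic A/B test.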

Common terms used in A/B testing

As you dive into A/B testing, you’ll quickly realize that it comes with its own set of jargon.

Understanding these terms is crucial because they form the foundation of how you interpret and act on your test results.

Here’s a quick glossary to get you up to speed:

1. Split testing

This is simply another name for A/B testing. It involves comparing two versions of a webpage or element to see which one performs better. The goal is to identify which version drives more conversions or engagement.

2. Control

The control is the original version of your webpage or element. It serves as the baseline against which all variants are tested. Think of it as the “before” in your experiment.

3. Variant

The variant is the new version you’re testing against the control. This could be anything from a different headline to a completely redesigned landing page.

The variant is the “after” that you hope will outperform the control.

4. Statistically significant results

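These are results where the difference you’re seeing between the control and the variant is unlikely to be explained by random chance alone. In practice, a result is usually called statistically significant when its p-value falls below 0.05, which matches the 95% confidence level commonly used in A/B testing.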

If you achieve statistically significant results, it tells you that the changes you made likely had a real impact, and you can trust the results enough to make a decision based on them.
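A common way to check significance for conversion rates is a two-proportion z-test. The sketch below is a minimal standard-library Python version using a pooled standard error; the visitor and conversion counts are hypothetical, and your testing tool may use a different statistical method (for example, a Bayesian approach).

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) comparing control A with variant B."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Under the null hypothesis both versions share one conversion rate, so pool them.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-sided p-value: probability of a |z| at least this large by chance.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: control converted 120 of 4,000 visitors, variant 156 of 4,000.
z, p = two_proportion_z_test(conv_a=120, n_a=4000, conv_b=156, n_b=4000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Significant at 95% confidence" if p < 0.05 else "Not significant")
```

Here the variant converts at 3.9% against 3.0%, giving a p-value of roughly 0.03, which is below the usual 0.05 cutoff.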

5. Conversion rate

This is one of the most important metrics in A/B testing.

It’s the percentage of visitors who take the desired action, whether that’s making a purchase, signing up for a newsletter, or clicking a button. Your goal is to improve this rate through your tests.
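The arithmetic is simple, but it is worth separating two ways of describing an improvement: the absolute change in percentage points and the relative lift over the control. The counts below are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the desired action, as a percentage."""
    return 100 * conversions / visitors

def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Change in the variant's rate relative to the control's rate, as a percentage."""
    return 100 * (variant_rate - control_rate) / control_rate

control = conversion_rate(150, 5000)  # hypothetical control: 150 sales from 5,000 visitors
variant = conversion_rate(180, 5000)  # hypothetical variant: 180 sales from 5,000 visitors
print(f"Control: {control:.1f}%, variant: {variant:.1f}%")
print(f"Absolute change: {variant - control:.1f} percentage points")
print(f"Relative lift: {relative_lift(control, variant):.0f}%")
```

Moving from 3.0% to 3.6% is a 0.6 percentage-point increase but a 20% relative lift, so when you read or report results, it helps to be clear which of the two is meant.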

Recommended reading: A/B Testing Terminology: A Glossary for Marketers

How do you set up an A/B test?

Setting up an A/B test might sound intimidating, but it’s actually quite manageable if you follow a clear process.

Here’s how to get started.

Step 1: Determine key metrics

Before you begin, decide on the key metrics you want to improve. This could be anything from click-through rates on a button to the number of sign-ups for your newsletter.

Knowing what you want to achieve will guide your test design and help you measure success accurately.

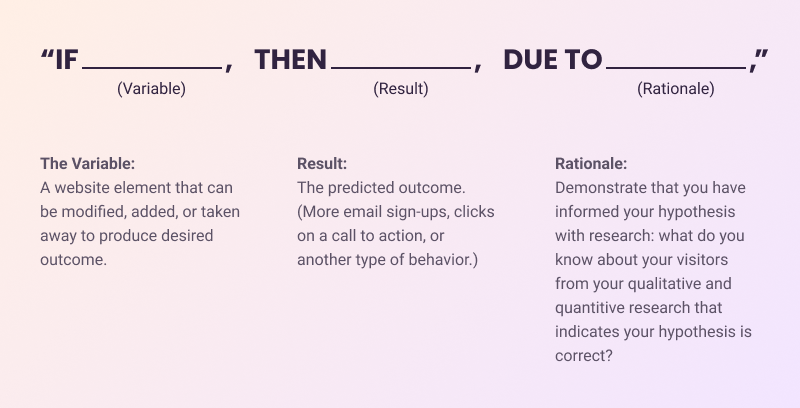

Step 2: Formulate hypotheses

Next, develop a hypothesis based on your understanding of user behavior.

A hypothesis in A/B testing is an educated guess about what changes might lead to improved performance. For example, you might hypothesize that “Changing the color of the call-to-action button to red will increase click-through rates.” This hypothesis will form the basis of your A/B test.

The more specific your hypothesis, the easier it will be to set up a meaningful split test.

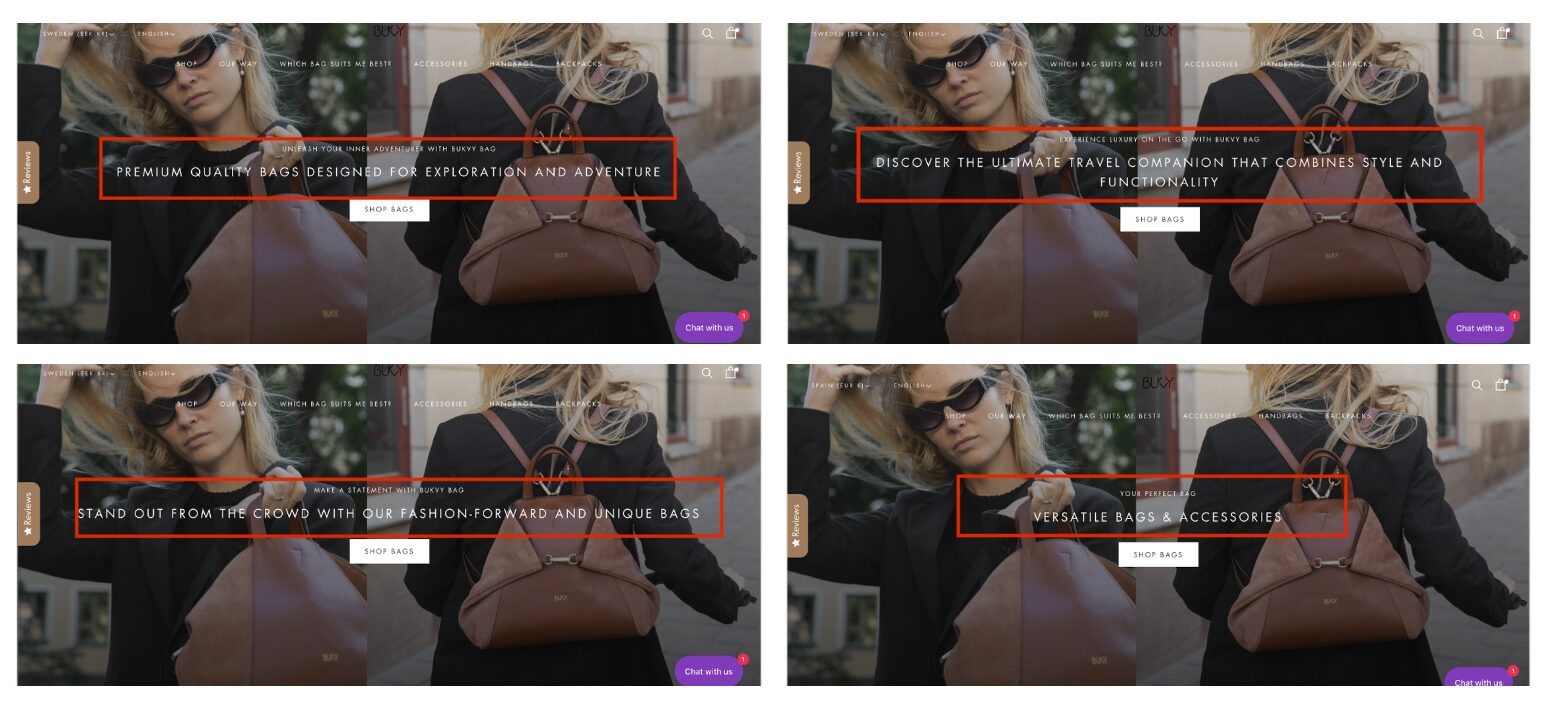

Step 3: Choose elements to test

With a solid hypothesis in hand, it’s time to select the elements on your web page that you want to test.

These could be headlines, images, buttons, forms, or any other component that impacts user interaction.

For instance, Bukvybag tested 4 different variants of their headline. Finding the winning one led to a 45% increase in orders, which shows the power of changes, even small ones.

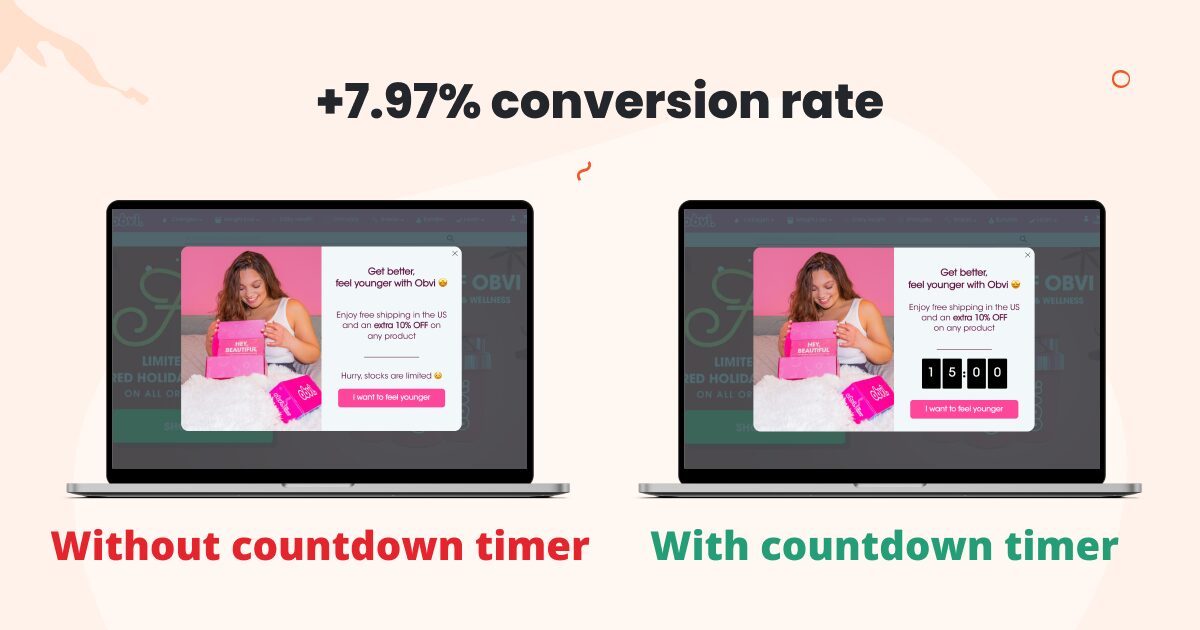

Here’s another example from Obvi. They A/B tested their popup form with and without a countdown timer:

The version with the countdown timer achieved a 7.97% increase in conversions, which means that A/B testing this element paid off!

Recommended reading: 6 Examples of AB Testing From Real Businesses

Step 4: Set up your A/B test

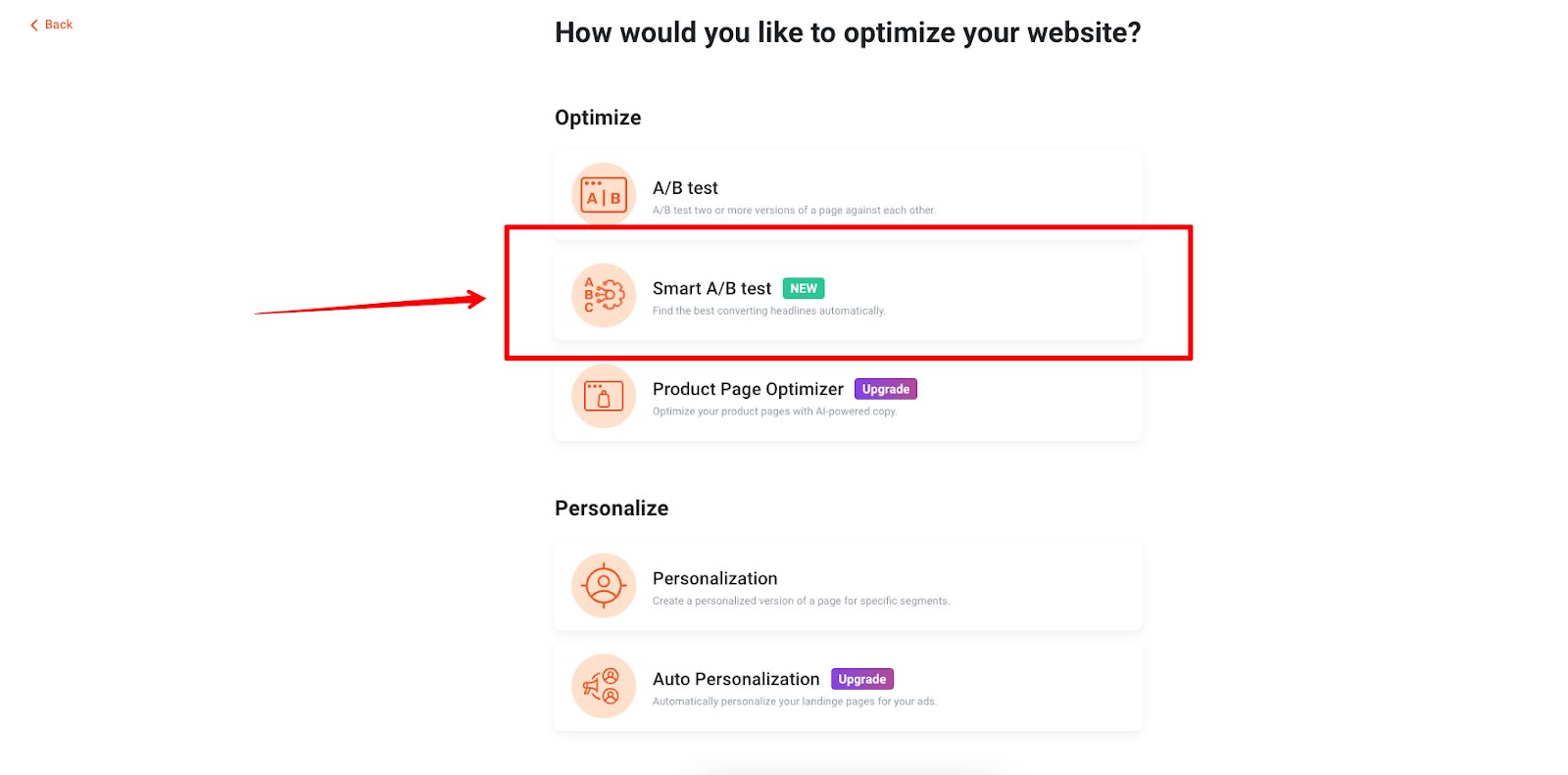

Now comes the fun part—setting up your A/B test. Tools like OptiMonk are a big help at this stage.

OptiMonk simplifies the process of creating variants, segmenting your audience, and tracking results with precision.

It also streamlines the entire testing workflow, making it easy to manage and analyze your experiments.

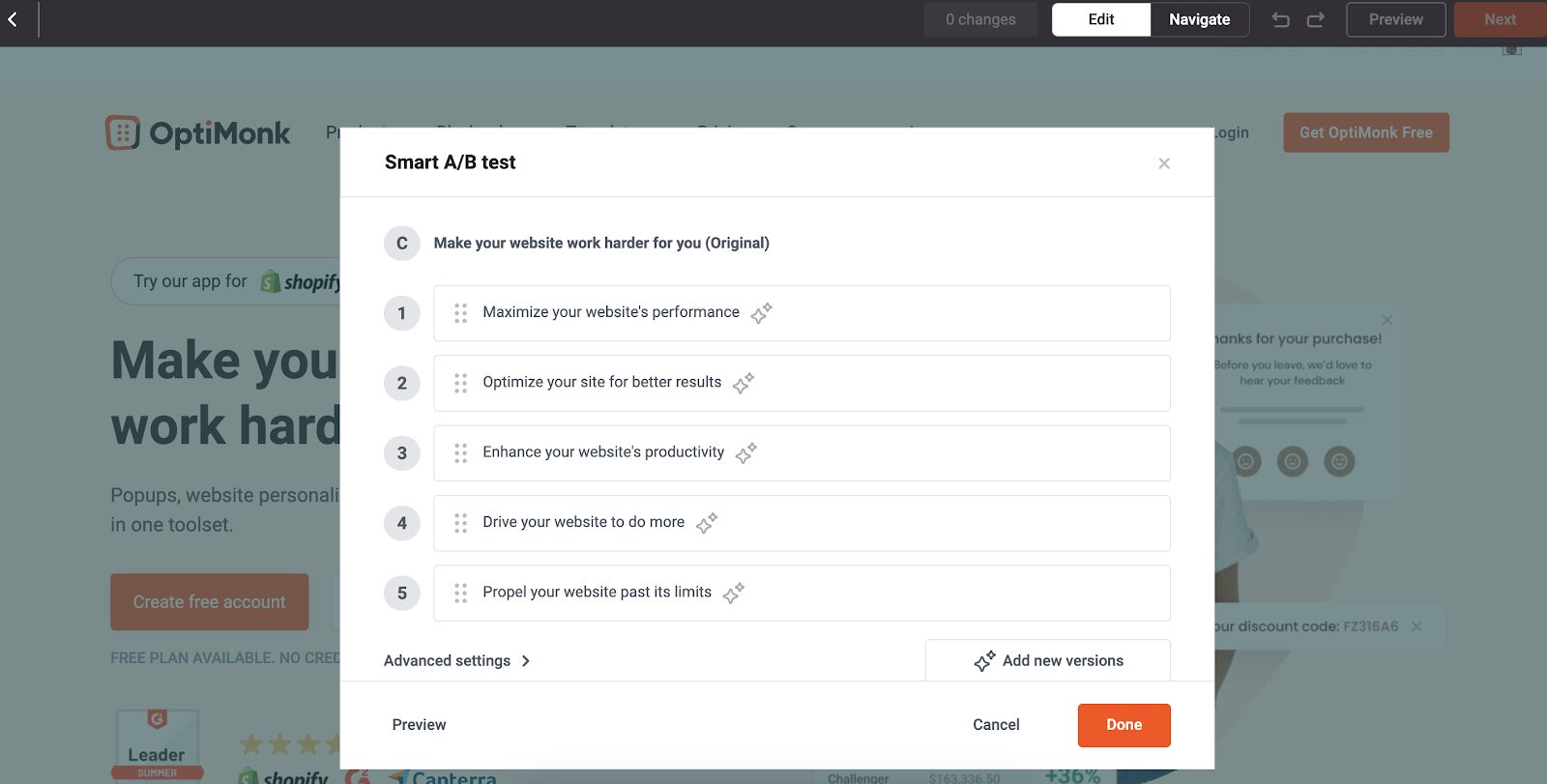

OptiMonk goes a step further with its AI-powered Smart A/B test feature. This tool can automatically generate alternative headlines for you to test, saving you time and effort.

You can easily test these AI-suggested headlines against your original one and tweak or remove any variants to fit your specific needs.

This feature makes it even easier to optimize your content and drive better results without needing extensive manual input.

Learn more about how to set it up here.

Step 5: Launch your A/B test

After setting everything up, it’s time to launch your A/B test. Once your test is live, give it enough time to run so that you can collect a meaningful amount of data.

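How long should your test run? That depends mainly on how much traffic your website receives, along with your baseline conversion rate and the size of the improvement you want to detect. The less traffic you have, the longer the test needs to run to achieve statistically significant results.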

The key here is patience—allow the test to run long enough to ensure that the results are reliable and not just due to random fluctuations.
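You can estimate the duration before launch. The sketch below uses a standard approximate sample-size formula for comparing two conversion rates, assuming a 95% confidence level and 80% statistical power (common defaults, not requirements). The baseline rate, the minimum detectable effect and the daily traffic figures are hypothetical inputs you would replace with your own, and rounding up to whole weeks is an added assumption so that both weekday and weekend behavior are covered.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variant to detect a relative lift of relative_mde."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)

def estimate_duration_days(visitors_per_variant, num_variants, daily_visitors):
    """Days needed at the given traffic, rounded up to whole weeks."""
    days = math.ceil(visitors_per_variant * num_variants / daily_visitors)
    return math.ceil(days / 7) * 7

# Hypothetical store: 3% baseline conversion rate, hoping to detect a 20% relative lift.
n = sample_size_per_variant(baseline_rate=0.03, relative_mde=0.20)
print(f"Visitors per variant: {n:,}")
print(f"Days at 1,500 visitors/day: {estimate_duration_days(n, 2, 1_500)}")
print(f"Days at 750 visitors/day: {estimate_duration_days(n, 2, 750)}")
```

With these inputs the estimate is roughly 13,900 visitors per variant, or three weeks at 1,500 visitors a day; halving the traffic roughly doubles the duration.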

Step 6: Analyze test results

When your A/B test concludes, dive into the test results to identify which variant outperformed the other. Use statistical analysis to determine if the difference in performance is significant.

If the data confirms that one variant is clearly better, implement the winning version on your site to start reaping the benefits.
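Alongside a p-value, a confidence interval for the difference in conversion rates shows how large the improvement plausibly is. The sketch below uses the simple Wald interval with hypothetical counts; more robust intervals exist, and your testing tool may report something different. The helper names are hypothetical.

```python
import math
from statistics import NormalDist

def difference_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for the difference in conversion rates (B minus A)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se

def verdict(low, high):
    """The interval must sit entirely on one side of zero to call a winner."""
    if low > 0:
        return "B is better"
    if high < 0:
        return "A is better"
    return "inconclusive"

# Hypothetical results: control A converted 120 of 4,000 visitors, variant B 156 of 4,000.
low, high = difference_confidence_interval(120, 4000, 156, 4000)
print(f"95% CI for B - A: {low:+.2%} to {high:+.2%} -> {verdict(low, high)}")
```

Here the interval runs from about 0.1 to 1.7 percentage points, entirely above zero, so B would be the winner; an interval that straddles zero means the test hasn't separated the two versions.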

When should you use A/B testing?

A/B testing isn’t just a tool for occasional use—it’s a critical component of any ongoing optimization strategy. But when exactly should you use it?

Knowing the right moments to deploy A/B testing can make a significant difference in your results.

1. Launching new campaigns or features

Whenever you’re rolling out a new marketing campaign, website feature, or product, A/B testing should be part of your launch strategy.

For instance, if you’re introducing a new email campaign, you could test different email subject lines, send times, or even content formats to see what resonates best with your audience.

This allows you to refine your approach right from the start, ensuring that your new initiative has the maximum impact.

2. Optimizing existing pages or elements

If you have high-traffic pages or key elements on your site (like your homepage, product pages, or checkout process), these are prime candidates for A/B testing.

Regularly testing and tweaking these critical areas can lead to significant improvements in conversion rates.

For example, testing different CTAs, button colors, or product descriptions can help you discover small changes that drive big results.

3. Addressing performance declines

Noticing a drop in key performance metrics like conversion rates, click-through rates, or user engagement? A/B testing can help you diagnose the issue.

By testing different versions of the underperforming element, you can identify what’s causing the decline and implement a fix based on actual data rather than guesswork.

4. Continuous improvement

A/B testing isn’t a one-and-done activity. It’s an ongoing process that should be integrated into your regular marketing and optimization efforts.

By continuously testing and iterating on your website or campaigns, you can adapt to changing user behaviors and market conditions, ensuring that your strategy stays effective over time.

5. Personalization and segmentation

A/B testing is also essential when you’re looking to personalize the user experience or segment your audience.

Testing different personalized content or targeted offers can help you fine-tune your approach for different audience segments, leading to higher engagement and satisfaction.

By understanding when to use A/B testing, you can strategically apply it to various aspects of your business, ensuring that you’re always making data-driven decisions that lead to better outcomes.

Common pitfalls in A/B testing

Before diving into A/B testing, it’s important to understand that the process isn’t without its challenges.

Many marketers stumble into common pitfalls that can compromise their split testing efforts and lead to inaccurate or misleading results.

By being aware of these potential traps, you can avoid them and ensure that your tests are both accurate and actionable.

1. Drawing premature conclusions

One of the most common mistakes is stopping a test too early. It’s tempting to declare a winner as soon as you see positive results, but this can lead to false positives.

A/B tests need to run long enough to gather sufficient data. This will ensure that the results are statistically significant and not just the result of random variation.

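A good rule of thumb is to decide on your sample size before the test starts, let the test run until you’ve reached it, and only then check whether the result meets a confidence level of at least 95%. Stopping the moment the numbers first cross that threshold brings back the false-positive problem, because every extra check gives random noise another chance to look like a winner.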
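The cost of stopping early can be shown with a simulation. The sketch below runs many hypothetical A/A tests (both arms share a 5% conversion rate, so any "winner" is a false positive) and compares two habits: checking significance after every batch of 100 visitors per arm and stopping at the first p < 0.05, versus checking once at a planned sample size of 2,000 per arm. The traffic numbers, number of checks and significance test are illustrative assumptions.

```python
import math
import random

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions yet in either arm
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))

def peeking_vs_fixed(runs=300, visitors_per_arm=2_000, looks=20, rate=0.05, seed=7):
    """Share of A/A tests declared 'significant' with and without peeking."""
    rng = random.Random(seed)
    batch = visitors_per_arm // looks
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        significant_at_some_look = False
        for look in range(1, looks + 1):
            # Both arms share the same true rate, so any "winner" is a false positive.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n = look * batch
            if p_value(conv_a, n, conv_b, n) < 0.05:
                significant_at_some_look = True  # a peeker would stop and call it here
        peeking_hits += significant_at_some_look
        # Fixed horizon: a single check once the planned sample size is reached.
        fixed_hits += p_value(conv_a, n, conv_b, n) < 0.05
    return peeking_hits / runs, fixed_hits / runs

peeking_rate, fixed_rate = peeking_vs_fixed()
print(f"False positives with peeking: {peeking_rate:.0%}; with one planned check: {fixed_rate:.0%}")
```

The single planned check should land near the nominal 5%, while the peeking habit flags a considerably higher share of tests, even though no real difference exists.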

2. Not accounting for seasonality

Seasonality and external factors can have a significant impact on your test results. For example, a test run during the holiday season might show different results than one run during a slower time of year.

Failing to account for these variations can lead to incorrect conclusions.

To mitigate this, consider the timing of your tests and, if necessary, run tests across different periods to ensure the results are consistent.

3. Testing too many variables at once

While it’s tempting to test multiple elements at once, doing so can make it difficult to determine which change is driving the results.

This is especially true in multivariate testing, where several changes are tested simultaneously and separating the effect of each one requires far more traffic and more careful analysis.

To avoid this, start with simpler A/B tests focusing on one variable at a time. This way, you can isolate the impact of each change and avoid confusing or inconclusive results.

4. Ignoring audience segmentation

Not all audience segments behave the same way, and failing to segment your audience can skew your results. For example, new visitors might respond differently to changes than returning customers.

By segmenting your audience and analyzing results for each segment separately, you can gain deeper insights and make more tailored optimizations.
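Segment-level analysis can start as a simple breakdown of conversion rates by segment and variant. The sketch below assumes you can export one record per visit with a segment label, the variant shown and whether the visitor converted; the records and the helper name are hypothetical.

```python
from collections import defaultdict

def rates_by_segment(visits):
    """visits: iterable of (segment, variant, converted) tuples -> {(segment, variant): rate}."""
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [conversions, visitors]
    for segment, variant, converted in visits:
        cell = totals[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}

# Hypothetical data: variant B helps new visitors but changes nothing for returning ones.
visits = (
    [("new", "A", True)] * 20 + [("new", "A", False)] * 980
    + [("new", "B", True)] * 35 + [("new", "B", False)] * 965
    + [("returning", "A", True)] * 60 + [("returning", "A", False)] * 940
    + [("returning", "B", True)] * 60 + [("returning", "B", False)] * 940
)
for (segment, variant), rate in sorted(rates_by_segment(visits).items()):
    print(f"{segment:>9} / {variant}: {rate:.1%}")
```

In this made-up data, B lifts new visitors from 2.0% to 3.5% but leaves returning customers at 6.0%, a detail the combined totals (4.0% vs. 4.75%) would hide. Keep in mind that each segment has fewer visitors than the whole test, so per-segment differences need their own significance check.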

5. Overlooking technical issues

Technical glitches, such as slow page load times or broken elements on one of the test variations, can significantly affect the outcome of your test.

Before launching your A/B test, ensure that both versions are fully functional and provide a seamless user experience.

Regularly monitor your test for any technical issues that could distort the results.
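One technical problem worth monitoring is a sample ratio mismatch: the traffic split drifting away from what you configured, which often points to a broken redirect, a tracking bug or a variant that fails to load for some visitors. The sketch below applies a chi-square goodness-of-fit test to the visitor counts; the 0.001 alarm threshold is a conventional but assumed choice, and the counts are hypothetical.

```python
import math

def sample_ratio_mismatch(visitors_a, visitors_b, expected_share_a=0.5, threshold=0.001):
    """Chi-square goodness-of-fit check of the observed traffic split.

    Returns (p_value, flagged). The 0.001 threshold is an assumed, deliberately strict cutoff.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (visitors_a - expected_a) ** 2 / expected_a + (visitors_b - expected_b) ** 2 / expected_b
    # With one degree of freedom, P(chi-square > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return p_value, p_value < threshold

print(sample_ratio_mismatch(10_000, 10_100))  # within normal variation for a 50/50 split
print(sample_ratio_mismatch(10_000, 10_600))  # flagged: investigate before trusting results
```

With a 50/50 split, 10,000 vs. 10,100 visitors is well within normal variation, while 10,000 vs. 10,600 would be flagged.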

6. Failing to document and learn

Each A/B test provides valuable insights, even if the results aren’t what you expected. Documenting your process, results, and any insights gained is crucial for future reference.

This practice not only helps you refine your testing strategy but also ensures that you’re continuously learning and improving your optimization efforts.

FAQ

How long should I run an A/B test?

Typically, you should run your A/B test for at least a few weeks to gather enough data for accurate results. The duration depends on factors like your website traffic and the specific changes you’re testing. Running the test too briefly can lead to inaccurate conclusions due to insufficient data.

Aim for a period that allows for a significant number of user interactions, ensuring that the results reflect genuine user behavior rather than random fluctuations.

Can I perform multivariate testing?

It’s generally best to test one element at a time to isolate its impact and understand which specific change drives the results. However, if you have advanced testing tools and a sufficient amount of traffic, you can conduct multivariate testing.

Multivariate testing allows you to test multiple elements simultaneously by showing different combinations of variants to users. This method can provide insights into how different changes interact with each other, but it requires a larger sample size and more complex analysis.

What if my test results are inconclusive?

If your split testing results are inconclusive, it’s essential to review your hypothesis, sample size, and the duration of your test.

An inconclusive result can occur if the test was not run long enough, if the sample size was too small, or if the changes tested were too subtle to make a significant difference. Here’s what you can do:

- Review your hypothesis: Ensure your hypothesis is clear and based on a solid rationale.

- Check your sample size: Make sure you have enough participants to achieve statistical significance.

- Evaluate test duration: Confirm that the test ran for a sufficient period to capture meaningful data.

- Refine and retest: Use the insights gained to adjust your hypothesis and design a new test. Even inconclusive results provide valuable information that can guide your next steps.

By carefully analyzing these factors, you can refine your approach and design more effective tests in the future.

Wrapping up

A/B testing is a powerful tool for improving your Shopify store’s performance.

By setting clear goals, selecting the right tools in the Shopify App Store, and following best practices, you can use A/B testing to make data-driven decisions that boost conversions and enhance the user experience.

Ready to get started? Dive into A/B testing and watch your Shopify store thrive!




- © OptiMonk. All rights reserved!
