How to Analyze A/B Test Results: A Beginner’s Guide for 2026

A/B testing is a core part of running an efficient, high-performing ecommerce website.

Pitting multiple versions of a page or campaign against one another helps you identify which variation delivers better results, which most often means higher conversion rates.

Instead of guessing which copy, image, or layout will convert, you can test real hypotheses and rely on hard data.

In this comprehensive guide, we’ll focus on:

- How to interpret A/B test results

- How to turn those insights into higher conversion rates and revenue

- How to use AI to streamline and automate A/B testing

Let’s get started!

Key takeaways

- A/B testing compares two versions of a page or campaign to identify which one drives more conversions or revenue.

- Reliable A/B tests require an adequate sample size and statistical significance before drawing conclusions.

- Use multiple KPIs, not just conversion rate, to understand the full impact of a variation.

- Audience segmentation reveals deeper insights, especially between mobile/desktop or new/returning visitors.

- External factors and heatmap data matter, and can explain unexpected or misleading results.

- A/B testing is an ongoing CRO process, not a one-off project. Modern AI tools can automate large parts of it.

What is A/B testing?

A/B testing (or split testing) is a method for comparing two versions of a page, message, or design element to determine which performs better.

Visitors are randomly split into two (or more) groups:

- Control group (A) sees the original version.

- Variant group (B) sees the new version.

By tracking how each group behaves—whether they convert, click, stay longer, or buy more—you can identify which version generates the best outcome.

A/B tests are commonly used to evaluate:

- Conversion rate

- Click-through rate (CTR)

- Average order value

- Revenue per visitor

- Bounce rate or time on page

- Email subject lines

A/B testing only works if you have sufficient traffic. Without a decent sample size, your results could be misleading or inconclusive.

A step-by-step guide to conducting A/B testing

Although A/B testing can seem complicated, there’s actually a simple step-by-step process that you can use to generate reliable data about your website’s performance.

Here are the six steps you should follow in your future tests.

Step 1: Analyzing your website

You’ll want to start by conducting a thorough assessment of your website’s performance.

By figuring out where most of your potential customers are leaving your sales pipeline, you’ll have a better idea of where to focus your A/B testing efforts to align with your business goals.

For example, if you’re running effective paid ads on Google or social media platforms but find that much of your incoming traffic bounces from your landing page, that’s a great place to start A/B testing.

Analytics tools like Google Analytics can show:

- Which pages have the highest drop-off

- Where paid traffic bounces

- Which steps in the checkout are underperforming

Focus your tests on the pages with the biggest opportunity for improvement.
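To make the idea of "biggest opportunity" concrete, here is a minimal sketch that computes the drop-off between consecutive funnel steps. The step names and visitor counts are made up for illustration; in practice you would export the real numbers from your analytics tool.

```python
# Hypothetical visitor counts per funnel step (illustrative numbers only).
funnel = [
    ("landing_page", 10000),
    ("product_page", 4200),
    ("cart", 1300),
    ("checkout", 700),
    ("purchase", 450),
]


def drop_off_rates(steps):
    """Return (from_step, to_step, share of visitors lost) for each transition."""
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        lost = (count_a - count_b) / count_a if count_a else 0.0
        rates.append((name_a, name_b, lost))
    return rates


rates = drop_off_rates(funnel)
worst = max(rates, key=lambda r: r[2])

for step_from, step_to, lost in rates:
    print(f"{step_from} -> {step_to}: {lost:.1%} drop-off")
print("Biggest drop-off:", worst[0], "->", worst[1])
```

The transition with the largest share of lost visitors is usually a sensible first candidate for testing, although traffic volume and revenue impact matter too.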

Step 2: Brainstorming ideas

On the pages that you’ve identified as a priority for testing, take a look at all the elements that might be contributing to your poor results.

Ask the following:

- Is the headline clear and compelling?

- Does the CTA stand out?

- Are product benefits obvious?

- Is the layout confusing?

Create a list of hypotheses backed by data and customer insights.

Use a brainstorming session to come up with creative, data-driven ideas for making changes to your website.

Step 3: Prioritizing ideas

After generating a list of ideas, you’ll want to prioritize them based on their potential impact and their feasibility.

If you have the resources to use the multivariate testing method, you can test a few ideas at once.

If you’re using traditional A/B testing, on the other hand, you should start with the optimization ideas that are the highest on your list and move down from there.
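One simple way to rank ideas by impact and feasibility is to score each on a small scale and multiply the two. The sketch below assumes a 1 to 5 scale and a hypothetical `Idea` class; the ideas and scores are examples, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int       # 1 (low) to 5 (high), your own estimate
    feasibility: int  # 1 (hard to build) to 5 (easy to build), your own estimate

    @property
    def score(self) -> int:
        # Simple product of the two estimates; other weightings are possible.
        return self.impact * self.feasibility


ideas = [
    Idea("Rewrite headline", impact=4, feasibility=5),
    Idea("Redesign checkout flow", impact=5, feasibility=2),
    Idea("Change CTA color", impact=2, feasibility=5),
]

# Highest score first; ties keep their original order.
ranked = sorted(ideas, key=lambda idea: idea.score, reverse=True)

for idea in ranked:
    print(f"{idea.score:>2}  {idea.name}")
```

With traditional A/B testing you would start at the top of this list and work your way down.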

Step 4: Creating challenger variants

You (or your marketing team) will then need to create test variations of the elements that you’re trying to optimize.

The same web page can perform very differently based on small changes to elements like your headline, so create many different versions of whatever you’re testing and then narrow it down to the best two or three options.

Step 5: Running the test

Now it’s time to execute the A/B test by randomly assigning users to the control and challenger variants.

Half of your visitors should see the original version of your page or ad campaign (control page), and the other half should see one of the new variations.
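Most A/B testing tools handle the split for you. If you are curious how it can work, here is a minimal sketch of one common approach: hashing a visitor ID so that the split is close to 50/50 and a returning visitor always sees the same version. The function name, the experiment name, and the ID format are all assumptions made for illustration.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant for a given experiment."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same visitor always lands in the same group.
print(assign_variant("visitor-123", "landing-page-headline"))
print(assign_variant("visitor-123", "landing-page-headline"))
```

This is pseudo-random rather than truly random, but it avoids having to store each visitor’s assignment.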

Make sure the test duration is long enough to generate an adequate sample size and to achieve statistical significance. Exactly how long you’ll have to run your tests depends on how much traffic you have arriving on your web page.
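To get a rough feel for how traffic translates into test duration, here is a sketch that uses the standard normal-approximation formula for comparing two conversion rates. The defaults (5% significance level, 80% power) are common conventions rather than something this guide prescribes, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_lift,
                            alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    min_detectable_lift: smallest relative lift worth detecting, e.g. 0.2 for +20%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(n_per_variant, daily_visitors, n_variants=2):
    """Days required if all daily visitors are split evenly across variants."""
    return math.ceil(n_per_variant * n_variants / daily_visitors)


n = sample_size_per_variant(baseline_rate=0.03, min_detectable_lift=0.2)
print("Visitors per variant:", n)
print("Days at 2,000 visitors/day:", days_needed(n, daily_visitors=2000))
```

Note how quickly the required sample grows as the lift you want to detect shrinks: small improvements need far more traffic to confirm.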

Step 6: Analyzing A/B test results

Finally, you need to analyze the data generated by the A/B test to draw conclusions and make informed decisions based on the results.

We’ll cover the ins and outs of this process in the next sections.

What is A/B testing analysis?

A/B testing analysis is a process that involves using statistical methods to evaluate data collected from an A/B test to determine the most effective version.

It’s crucial to choose the appropriate statistical tests based on the type of data being analyzed and the specific research question being addressed to ensure the accuracy and reliability of the results.

The importance of A/B testing analysis lies in its ability to help organizations determine the effectiveness of their optimization efforts.

Through thorough analysis of A/B test results, you can understand whether the alterations made, such as modifications to call-to-action buttons or website content, have had the desired impact on key metrics.

3 types of A/B testing analysis

There are three levels to analyzing A/B test results, starting with basic analysis.

1. Basic analysis

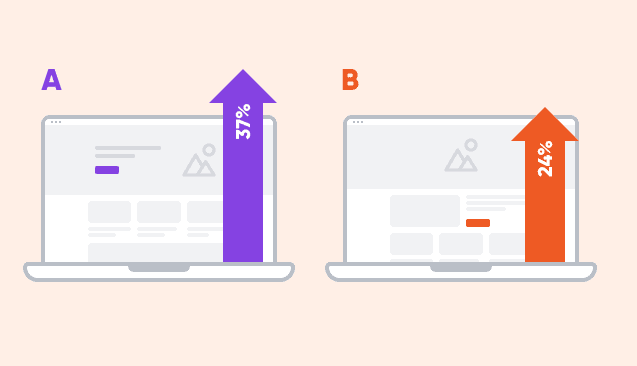

Basic A/B testing analysis has two goals: first, to ensure that your test has generated statistically significant results, and second, to establish which version is the winning variation.

Statistical significance tells you how unlikely your observed result would be if there were actually no difference between the two versions. The less likely the result is under pure chance, the more confident you can be that it reflects a real difference.

A test can fail to reach significance if only a few visitors have seen the different variations of your site, since a small sample makes it more likely that the outcome is random and not a valid reflection of any differences between the two versions.

You’ll need to have an adequate sample size in order to get accurate results, and then you’ll be able to discover whether you’ve managed to achieve statistically significant results.

You should compare the performance of the control version to the performance of the challenger version. The winning variation will have performed better on the KPI that you’re concentrating on (usually conversion rate).
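Your testing tool will normally run this check for you, but it helps to see what is going on under the hood. Below is a minimal sketch of a two-proportion z-test, one common way to compare conversion rates. The function name and the visitor and conversion counts are illustrative assumptions, and your tool may use a different statistical method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates; returns (rate_a, rate_b, z, p_value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Cannot test: no variation in the data.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_a, p_b, z, p_value


# Hypothetical results: control converts 300 of 10,000; variant 360 of 10,000.
rate_a, rate_b, z, p_value = two_proportion_z_test(300, 10000, 360, 10000)
print(f"Control: {rate_a:.2%}, Variant: {rate_b:.2%}")
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Significant at 5%:", p_value < 0.05)
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be pure chance.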

2. Secondary metrics analysis

Often, it’s a good idea to analyze your results using multiple success metrics rather than relying on just one KPI.

You might find that one of your losing variations has a slightly lower conversion rate but outperforms the winner on other metrics, like time spent on site and revenue generated.

It’s wise to keep testing until you find a version of your webpage that performs well across all the success metrics you care about. After all, consumer behavior is complex, so understanding it fully requires more than a single number.
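Here is a small sketch of what looking beyond conversion rate can reveal. The numbers are invented so that the variant converts slightly worse but earns more per visitor; the `summarize` helper is a hypothetical name.

```python
def summarize(visitors, orders, revenue):
    """Compute three common KPIs from raw totals for one variation."""
    return {
        "conversion_rate": orders / visitors,
        "average_order_value": revenue / orders if orders else 0.0,
        "revenue_per_visitor": revenue / visitors,
    }


# Illustrative totals only.
control = summarize(visitors=10000, orders=300, revenue=15000.0)
variant = summarize(visitors=10000, orders=290, revenue=16240.0)

for kpi in control:
    print(f"{kpi:>20}: control={control[kpi]:.4f}  variant={variant[kpi]:.4f}")
```

Judged on conversion rate alone, the control wins here. Judged on revenue per visitor, the variant does. Which one matters more depends on the goal you set for the test.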

3. Audience breakdown analysis

Finally, you can get deeper insights into visitor behavior by segmenting your audience based on demographics, behavior, or other factors. This can help you understand how your subgroups respond differently to the different variations.

For example, it’s usually a good idea to compare test results for mobile versus desktop users, since mobile users often respond very differently to design choices.

Once again, you need to be careful to pay attention to the statistical confidence level in each of these test results.

You might have achieved a high enough sample size for your basic analysis to be statistically significant, but this doesn’t mean your sample size of mobile visitors is large enough to draw conclusions at a high level of confidence.

Failing to take these factors into account can lead to false positives and mistakes.
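The sketch below shows why segment results deserve extra caution. It runs a two-proportion z-test per segment and then applies a Bonferroni correction (dividing the significance threshold by the number of segments), which is one conservative way to limit false positives when you check several subgroups. The segment data and function name are made up for illustration.

```python
import math
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical (conversions, visitors) per segment and variation.
segments = {
    "desktop": {"A": (220, 6000), "B": (250, 6000)},
    "mobile": {"A": (80, 4000), "B": (110, 4000)},
}

alpha = 0.05
corrected_alpha = alpha / len(segments)  # Bonferroni correction

results = {}
for name, data in segments.items():
    results[name] = p_value(*data["A"], *data["B"])
    print(
        f"{name}: p={results[name]:.4f} "
        f"significant at {alpha}: {results[name] < alpha}, "
        f"after correction: {results[name] < corrected_alpha}"
    )
```

In this example the mobile segment looks significant on its own but no longer clears the bar once you account for having tested two segments.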

How to analyze your A/B test results

As you go over your A/B test results, you want to proceed from the more basic types of analysis to more complicated ones.

Here’s an easy-to-follow procedure you can use:

1. Check for statistical significance and winning variant

The first thing you’ll want to do is check whether your A/B test collected a large enough sample and whether the difference between variations is statistically significant.

The sample size is a crucial factor in A/B testing analysis. It plays a significant role in the reliability and accuracy of the experiment results.

A sample size that is too small can lead to inconclusive findings, making it challenging to draw valid conclusions. Conversely, a very large sample size can flag minor differences as statistically significant even when they are not practically relevant.
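One way to separate statistical from practical relevance is to look at a confidence interval for the difference in conversion rates and compare it with the smallest improvement you would actually care about. This sketch uses a normal-approximation interval; the traffic numbers and the 0.2 percentage point threshold are assumptions for illustration.

```python
import math
from statistics import NormalDist


def difference_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Confidence interval for (rate_b - rate_a), in absolute terms."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# A very large hypothetical test: 1,000,000 visitors per variation.
low, high = difference_confidence_interval(30000, 1_000_000, 30600, 1_000_000)
min_practical_difference = 0.002  # 0.2 percentage points, your own threshold

print(f"95% CI for the difference: {low:.5f} to {high:.5f}")
print("Statistically significant:", low > 0)
print("Practically relevant:", low >= min_practical_difference)
```

Here the interval excludes zero, so the result is statistically significant, yet even the upper end falls short of the chosen practical threshold.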

Luckily, most A/B testing tools will automatically perform this analysis, so all you have to do is check out your dashboard.

Next, you can confirm which variation is the winner (i.e., which variation performed best in terms of your primary metric).

Even at this early stage of the analysis, this gives you a leading candidate for the version you’ll eventually roll out to all your site visitors.

2. Compare your test results across multiple KPIs

Next, you should focus on analyzing results across various measures of customer behavior.

Although comparing conversion rates is essential, especially since they’re usually the KPI used as the primary metric for A/B testing, it’s not enough on its own.

You should consider other factors in order to gain a well-rounded understanding of your web pages and marketing campaigns, including your click-through rate, time spent on page, and revenue generated.

When deciding on the metrics for an A/B test, it’s important to align them with your specific goals and objectives for the test.

The chosen metrics should be relevant to the test, measurable, and directly linked to the goals you have set for the experiment.

By selecting metrics that are meaningful and aligned with your desired outcomes, you can effectively evaluate the success of your A/B test and make informed decisions based on the results.

3. Segment your audience for further insights

Segmentation is a powerful tool for understanding how different groups of users respond to your variations.

Once you’ve seen how your audience as a whole interacts with the different variations you’ve created, you can break your results down further.

For example, you might examine whether first-time visitors respond to a certain variation in a very different way than your returning visitors.

These types of insights can help you personalize your website for different users’ preferences in the future.

4. Analyze external and internal factors

Now that you’ve analyzed the data you’ve collected from an A/B test, you also need to consider external variables that might have affected your results.

For instance, if you run an A/B test during the busy holiday season or right after one of your competitors goes out of business, your results might not be an accurate representation of what you’d see at a different time of the year.

While it’s impossible to control everything, you should try to ensure your test succeeds in creating results that you can generalize.

If you think that your A/B testing results have been seriously affected by external factors, it might be a good idea to repeat your test to see if you get comparable results.

5. Review click maps and heatmaps

Click maps and heatmaps provide visual representations of how users interact with a web page.

You can gain valuable insights by looking at how users navigate your page. One variation might lead to very different user behavior than another one, and this might affect your desired outcome in a way that doesn’t show up in the quantitative data.

If your A/B testing tool doesn’t automatically include a heat mapping or session recording feature, you can use a dedicated tool like Hotjar.

6. Take action based on your results

At the end of this process, you’ll know whether the new variations you tried out as part of your A/B test have gotten the results you’re looking for.

If you haven’t found a significant difference between the variations you tested, that’s perfectly fine. There’s nothing wrong with testing a hypothesis that turns out to be wrong.

That’s exactly what testing is for! In that case, you might decide to stick with your original design or continue iterating to find something that improves it.

On the other hand, if you’ve found a new variation that crushes what you were doing before, you’ll probably want to pull the trigger on rolling it out for your entire audience. Congrats!

There are so many different outcomes at this stage that we can’t really cover them all. For instance, if you have a variant with a high conversion rate but a mediocre revenue-per-click, you might want to keep testing, but that’s always a tough decision.

The best advice is to consider A/B testing as part of a broader, continuous process of conversion rate optimization and keep refining your website indefinitely.

3 common mistakes during A/B testing

A/B testing can be a goldmine for insights—if you avoid the usual pitfalls. Here are the most common mistakes marketers make:

- Testing too many variables at once: This muddies your data and makes it nearly impossible to know what actually drove the change. Test one thing at a time to keep results clear and actionable.

- Not running the test long enough: Ending your test before it has collected the planned sample size, or stopping the moment the numbers first look significant, can lead to false positives (aka celebrating too early). Give your test enough time to gather reliable data.

- Skipping audience segmentation: Assuming one version fits all is risky. Different segments respond differently; what works for new users might fail for returning ones. Always break down results by audience type.

And here is a bonus tip: start with a clear hypothesis. A strong hypothesis guides your test and makes your results more meaningful. Without one, you’re just guessing with prettier graphs.

Automate your A/B testing process with AI

As you’ve seen, A/B testing can be a complex process that requires time and effort, not to mention all the CRO knowledge you’ll need.

That’s why small brands and marketers have struggled to experience the full benefits of A/B testing—until now.

OptiMonk’s Smart A/B Testing tool allows you to fully automate your A/B testing process: it creates variants for you, runs the tests, and analyzes the results without your involvement.

You simply choose the elements you want to optimize on your landing pages, and then the AI works its magic!

With Smart A/B Testing, you can say goodbye to manual work and embrace data-driven A/B testing.

FAQ

How does A/B testing help increase conversion rates?

A/B testing boosts conversion rates by showing which version of your landing page or message leads to more sign-ups, clicks, or purchases. Instead of guessing, you make decisions backed by real user data.

What’s the difference between A/B testing and Split URL testing?

While both test different versions of a web experience, Split URL testing sends website traffic to completely different URLs. A/B testing usually compares elements on the same URL, while Split URL testing compares entirely separate pages (like yourdomain.com/page-a vs yourdomain.com/page-b).

Can A/B testing reduce my bounce rate?

Yes. Testing different landing page variations can reveal which layouts, messages, and visuals keep website visitors engaged longer, ultimately lowering bounce rate.

What is the difference between A/B testing and multivariate testing?

A/B testing compares two versions of a page, while multivariate testing evaluates multiple elements on a landing page at the same time (e.g., headline + image + CTA). Multivariate tests are more complex, require more traffic, and are ideal when you want to understand how several changes interact to influence conversion rates.
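To see why multivariate tests need more traffic, consider how quickly the number of combinations grows. The sketch below counts the variations for a hypothetical test with two headlines, two images, and three CTAs.

```python
from itertools import product

# Hypothetical options for each element being tested.
headlines = ["Headline 1", "Headline 2"]
images = ["Image 1", "Image 2"]
ctas = ["CTA 1", "CTA 2", "CTA 3"]

# Every combination of headline, image, and CTA is its own variation.
combinations = list(product(headlines, images, ctas))

print("Number of variations:", len(combinations))
for headline, image, cta in combinations[:3]:
    print(headline, "+", image, "+", cta)
```

Each of these variations needs its own adequate sample, so the same traffic is spread far thinner than in a simple two-version test.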

Wrapping up

Whether you’re rolling out a new landing page or trying to refine the subject line of an email marketing campaign, A/B testing is a crucial tool for creating optimized user experiences that lead to higher conversions and sales.

Testing is simply the only way to make decisions in a reliable, data-driven way.

But remember, your A/B testing is only as good as your analysis of your results. That’s why understanding the significance level of your tests, looking at many different KPIs, and segmenting your audience are essential.

Once you know the ins and outs of this crucial stage of A/B testing, you’ll be able to achieve results you never thought were possible.

If you’d like to start A/B testing but avoid all the hassle, give OptiMonk’s Smart A/B testing tool a try!

Barbara Bartucz
