How to Conduct A/B Testing in 5 Easy Steps (& 4 Examples)

Nikolett Lorincz

What if we told you there was a way to improve your marketing campaigns, optimize user experiences, and learn more about your customers?

Well, there is.

All you have to do is run A/B tests, and it’s easier than you think.

In this article, we’ll dive into the importance of A/B testing and how you can use this method as part of conversion rate optimization (CRO) to improve conversion rates, measure changes, and boost your bottom line.

But first, let’s cover the basics.

What is A/B testing?

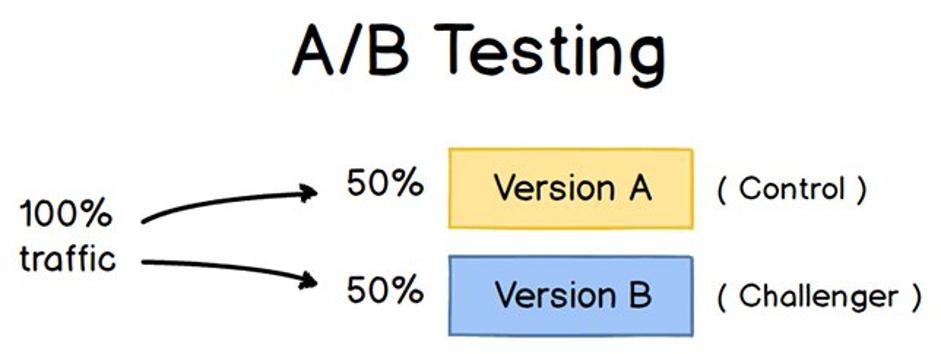

A/B testing, also known as split testing, is when you analyze two different versions of the same thing to see which one performs better. It’s like a scientific experiment for your marketing campaigns.

Imagine you’ve created a new landing page for your website. You like the way it looks, but you’re not sure if it’ll outperform your existing landing page.

Instead of guessing, you can run an A/B test.

Half of the visitors to your site will see Landing Page A, while the other half will see Landing Page B.

After the test, you’ll know which version had the best conversion rate.
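Here’s a minimal sketch of how a 50/50 split like this could be implemented, assuming every visitor has a stable ID such as a cookie value. The function name `assign_variant` and the experiment label are made up for illustration. A/B testing tools handle this step for you and may do it differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "landing-page-test") -> str:
    """Deterministically assign a visitor to variant "A" or "B" (roughly 50/50)."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor always lands on the same page while the test is running.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


# Hypothetical visitor ID, for illustration only.
print(assign_variant("visitor-42"))
```

Hashing instead of flipping a coin on every page load means a returning visitor keeps seeing the same version, which keeps the two groups cleanly separated.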

Source: HubSpot

A/B tests can be run on almost anything, including:

- Newsletter designs, subject lines, and other email copy

- Call-to-action buttons

- Product descriptions

- Headlines

- Value propositions

- Landing pages

- Popups

- And more

You can also test different elements of the same web page at different time periods to increase testing frequency and track multiple metrics.

The versatility of split testing makes it one of the most valuable assets in your digital marketing toolbox. It lets you see how people respond to changes in your digital content, providing insight into visitor behavior and buying preferences.

Why do you need A/B testing?

As a saying often attributed to Albert Einstein goes, “Failure is success in progress.”

You won’t create the perfect marketing campaign by guessing. You create it through trial and error—learning what doesn’t work and adapting your strategy.

A/B testing helps you improve your understanding of customers’ preferences and shopping habits.

By running A/B tests, you can learn which marketing strategies are likely to boost sales, which strategies to avoid, and how to create delightful customer experiences that influence user behavior.

This not only enhances the performance of your ongoing campaigns but also helps you make better-informed decisions going forward, aligning with a well-defined marketing strategy for long-term growth and improvement.

How to conduct A/B testing in 5 easy steps

There’s a right way and a wrong way to conduct A/B tests.

The right way will help you reach your conversion goals by fine-tuning various elements of your website to align with your visitors’ preferences. The wrong way will waste a lot of time, and nobody wants that.

Here are some best practices to help you get the most out of your A/B tests.

1. Start with a strategy

Conducting A/B tests is a lot like following the scientific method. You don’t just randomly change things.

There’s a process to follow:

- Start with a question: “How can I increase newsletter subscriptions?”

- Make a hypothesis: “Adding an exit-intent popup to my landing page will increase subscribers.”

- Conduct the experiment: Run an A/B test on your landing page, with and without the popup.

- Draw your conclusion: “I gained 8.75% more subscribers by using the popup.”
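A conclusion like “8.75% more subscribers” is a relative lift: the challenger’s improvement measured against the control. The sketch below shows the arithmetic. The two subscription rates are hypothetical numbers chosen for illustration, not data from a real test.

```python
def relative_lift(control_rate: float, challenger_rate: float) -> float:
    """Return the challenger's relative improvement over the control, in percent."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (challenger_rate - control_rate) / control_rate * 100


# Hypothetical rates: 4.00% of visitors subscribed without the popup, 4.35% with it.
lift = relative_lift(0.0400, 0.0435)
print(f"{lift:.2f}% more subscribers")
```

Keep in mind that a relative lift is not the same as a percentage-point change. In this example, the rate only moved by 0.35 percentage points.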

If you don’t have a goal or desired outcome in mind, you’re aimlessly testing things in hopes that something might work out. That’s why it’s important to develop a strategy before you start your A/B testing.

Here’s an example.

Pretend your goal is to increase newsletter subscriptions. The first thing you should do is build your strategy around that goal by thinking about different ways you can increase subscriptions.

You could:

- Add an opt-in form popup to target visitors when they leave your landing page.

- Create a new landing page.

- Change the button color of your existing call to action.

These are excellent things to test. You can run as many A/B tests as you want, but you should test only one element at a time.

Don’t test your new popup on the new landing page because you won’t know which element visitors are responding to.

However, you can test several versions of the same element at once. This is often called A/B/n testing.

For example, you could split test three spin-the-wheel popup versions, each with a different call-to-action button. You’re comparing more than two variants, but you’re still analyzing a single element: the spin-the-wheel popup. (Multivariate testing, by contrast, changes several elements at once and compares their combinations.)

2. Lay the groundwork for your A/B test

Every A/B test should have a:

- Control: Your current digital asset. The control could be blog post titles, email subject lines, a call-to-action form, or anything else that can be tested.

- Challenger: The modified version of the control that you want to test.

Let’s say you have a welcome popup on your blog post, and you want to test the effectiveness of an exit-intent popup. The welcome popup is your control, and the exit-intent popup is your challenger.

Now, it’s time to set your sample size. Who will you send the test to?

If you’re split testing an email campaign, you can simply send the control to half of your subscribers and the challenger to the other half.

But for web elements, you want to make sure you have a large enough sample size. Otherwise, your test won’t give accurate results.

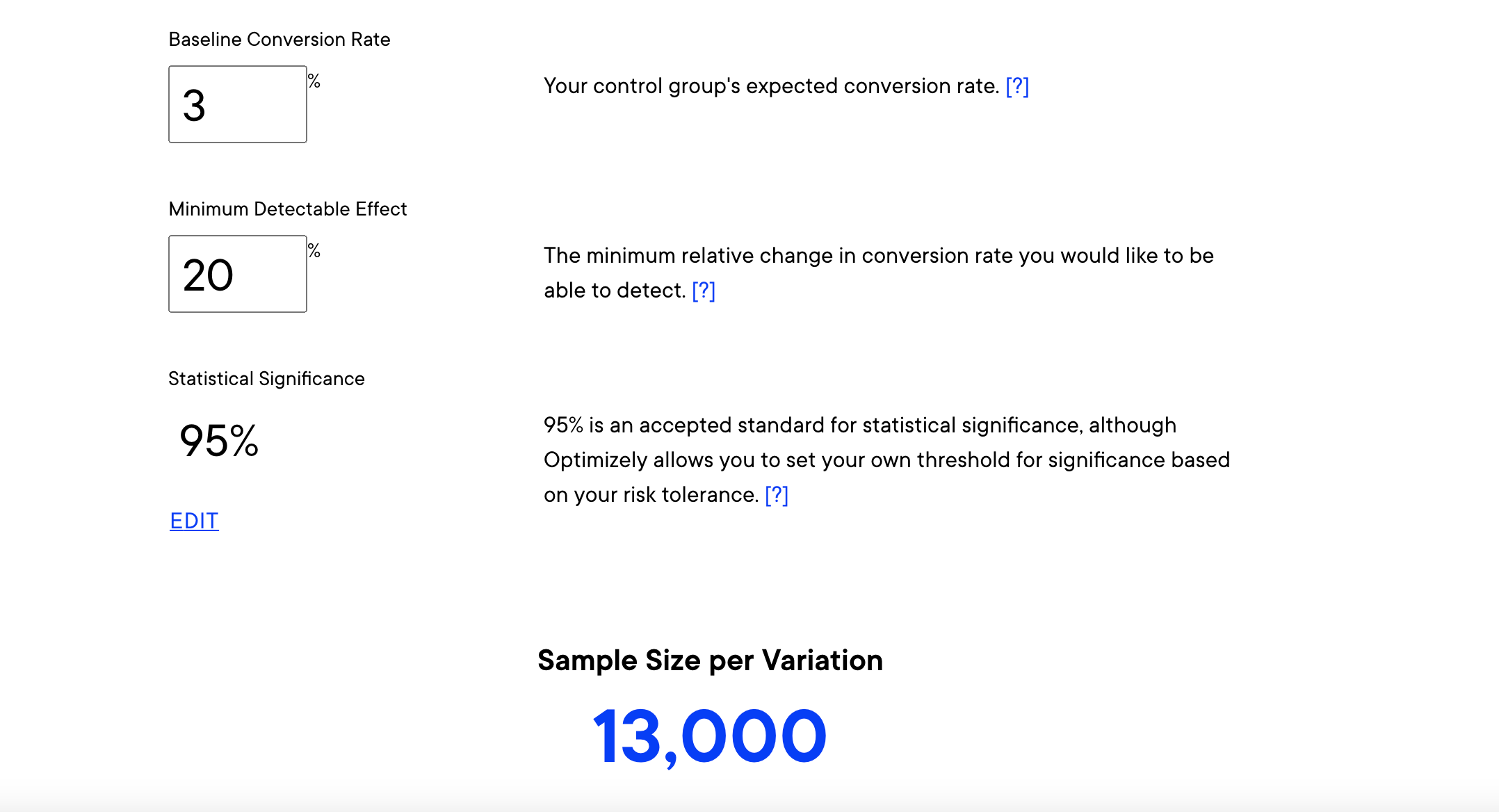

Optimizely has a calculator that helps set your sample size. You’ll enter the conversion rate of your control, choose the smallest improvement you want the test to be able to detect (the minimum detectable effect), and set the statistical significance level for your test.
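If you’re curious what happens behind a calculator like this, here’s a rough sketch using the classical fixed-sample formula for comparing two conversion rates. This is an assumption for illustration, not Optimizely’s actual method, so expect the numbers to differ from what their calculator shows. The function name, the 80% power default, and the example inputs are also illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    relative_mde: float,
    significance: float = 0.95,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed per variant for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate you hope the challenger reaches
    if relative_mde <= 0 or not 0 < p1 < 1 or not 0 < p2 < 1:
        raise ValueError("rates must be between 0 and 1 and the MDE must be positive")

    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 3% baseline conversion rate, 20% relative improvement.
print(sample_size_per_variant(baseline_rate=0.03, relative_mde=0.20))
```

Notice how quickly the required sample grows as the improvement you want to detect gets smaller. That’s why low-traffic pages often need longer tests.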

3. Launch your test

You’ll need an A/B testing tool to launch the test and collect the data.

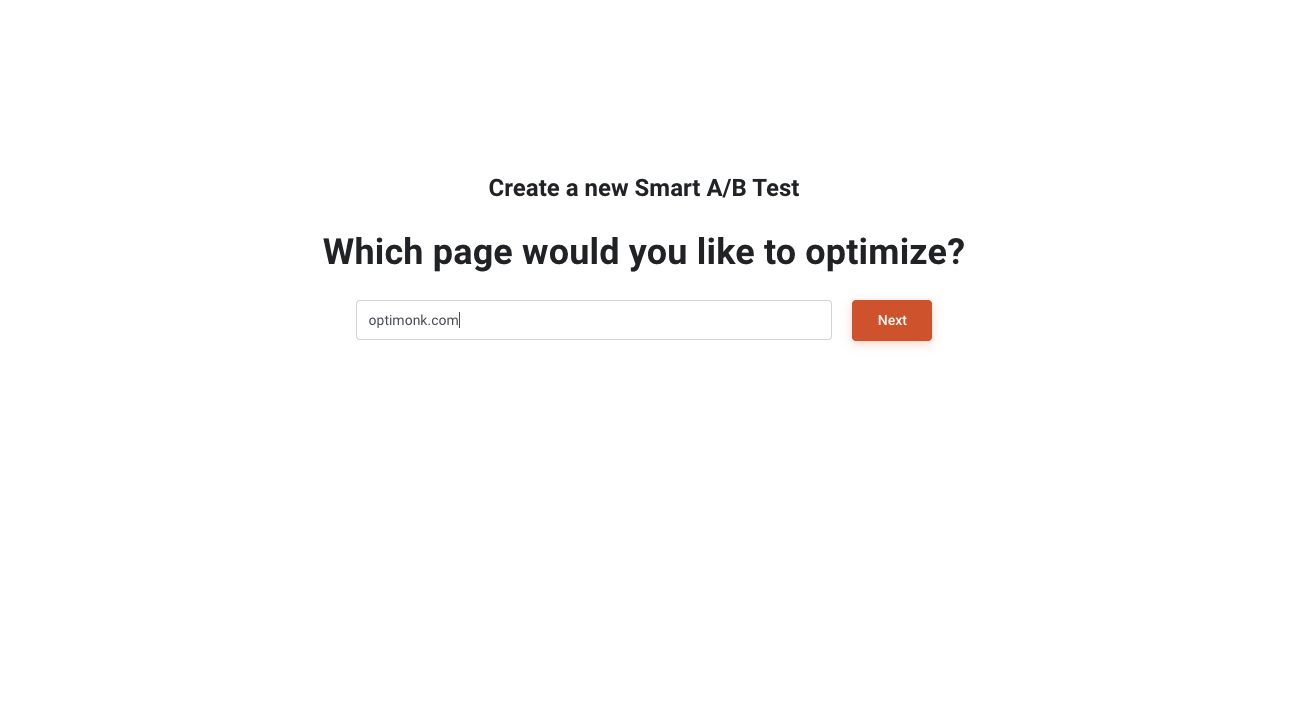

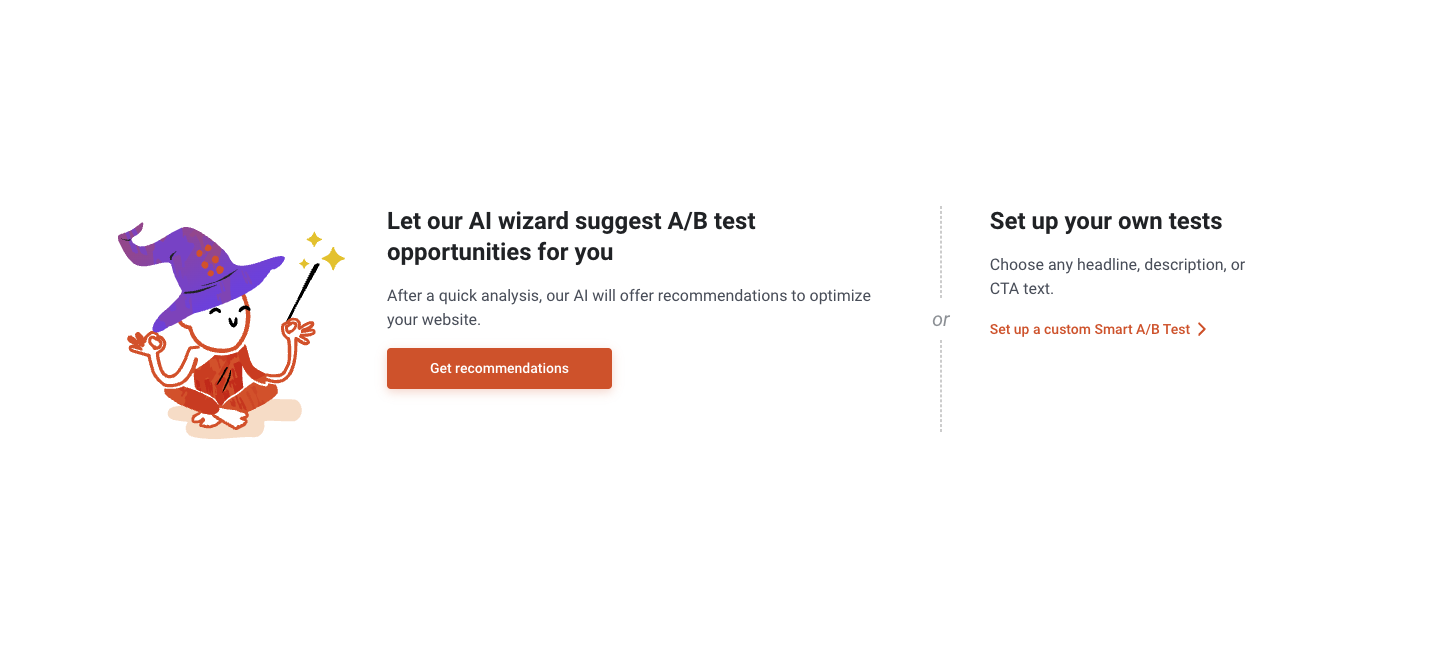

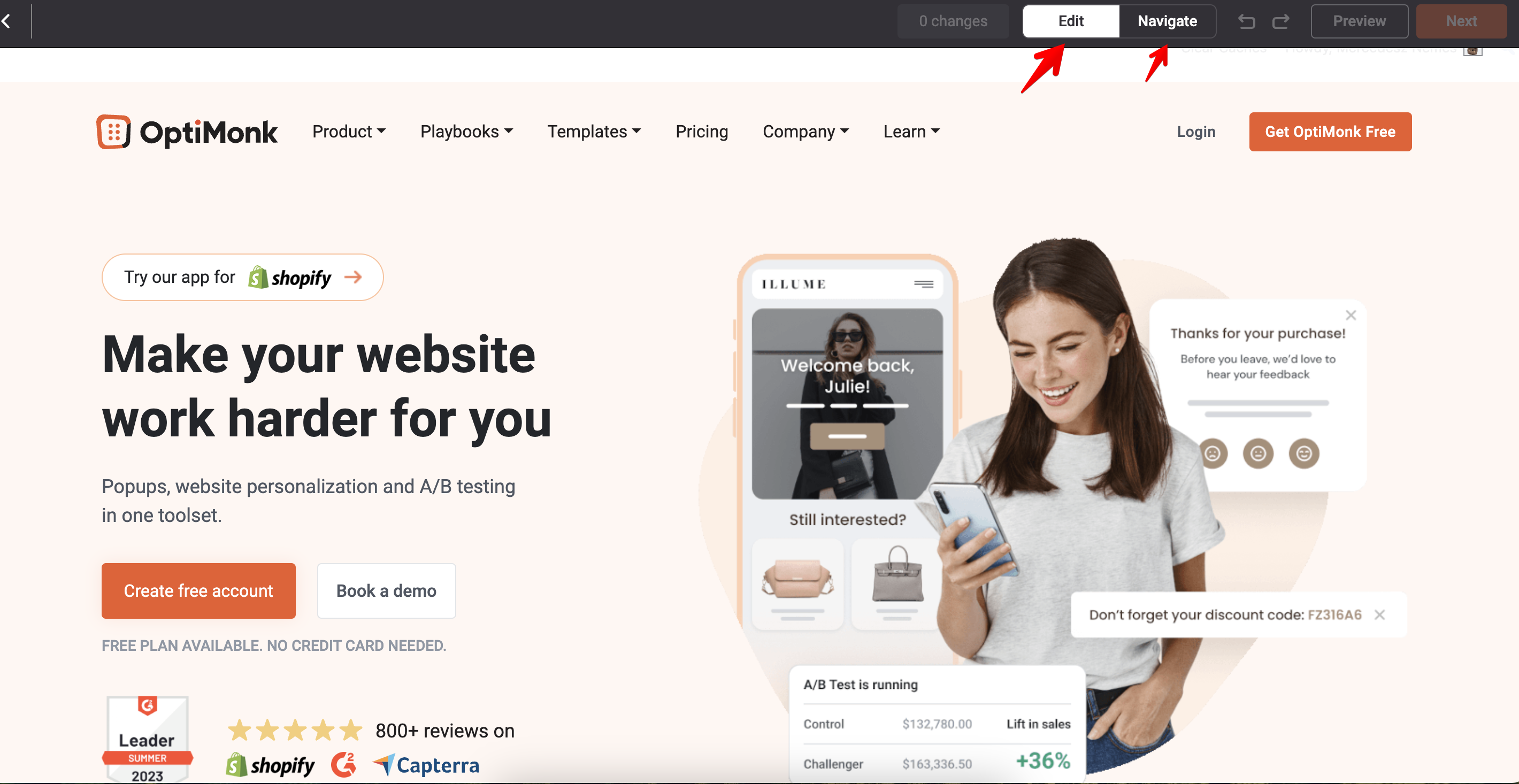

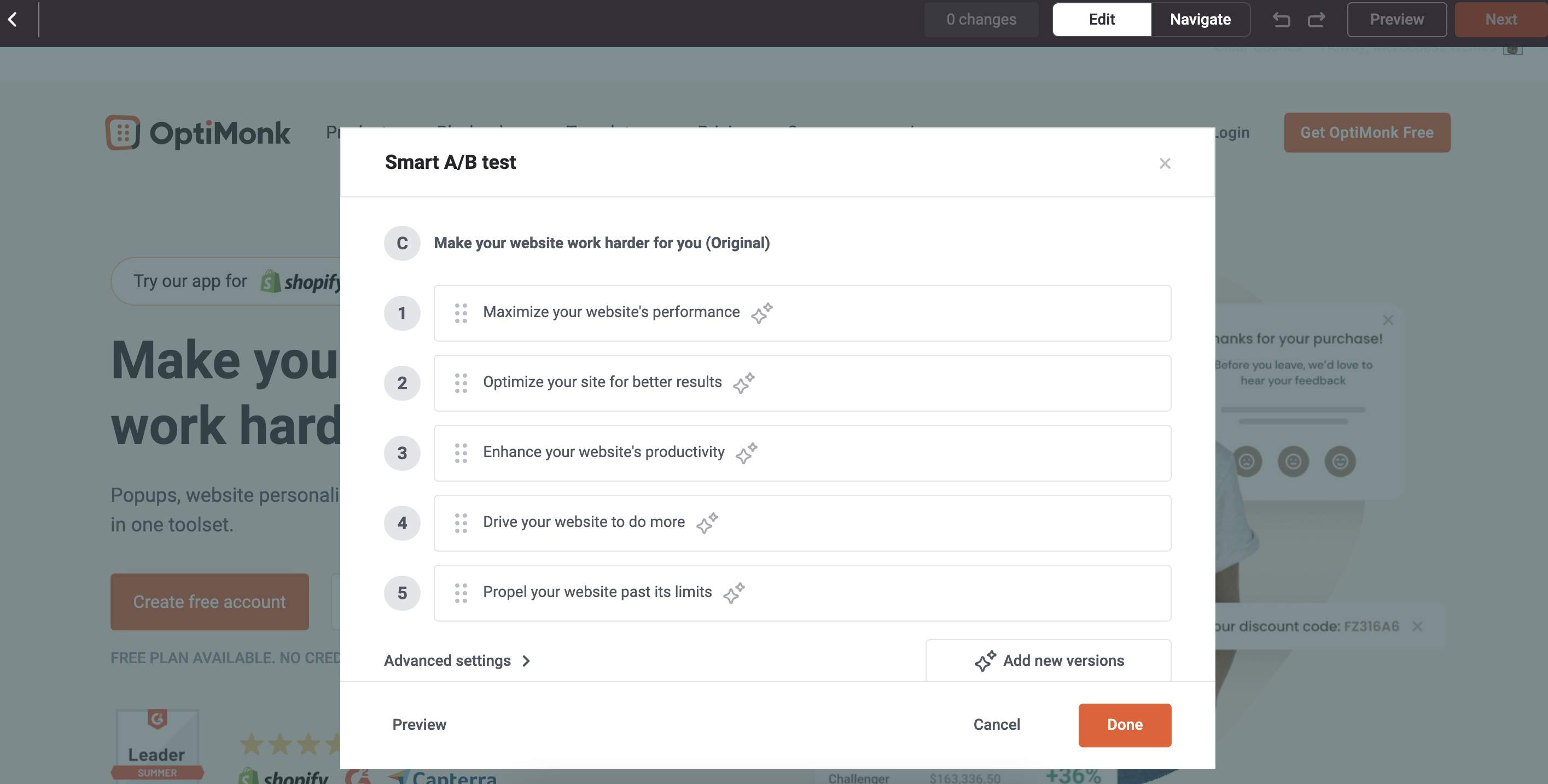

OptiMonk’s Smart A/B Testing tool is a great option, leveraging fully automated, AI-powered A/B tests to help you identify the best-performing text elements on your landing pages.

OptiMonk’s tool automatically cycles through all your text variants one by one, identifying a winner in the initial test and then pitting the next variant against this winner. This process continues until the top-performing option is identified.
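The “winner stays on” process described above can be sketched in a few lines. This is only an illustration of the idea, not OptiMonk’s implementation: `find_top_variant`, `pick_higher_rate`, and the conversion rates are all hypothetical, and a real test would compare variants using live traffic and a significance check.

```python
from typing import Callable, Sequence


def find_top_variant(
    variants: Sequence[str],
    run_test: Callable[[str, str], str],
) -> str:
    """Test the current winner against each remaining variant, one by one."""
    if not variants:
        raise ValueError("need at least one variant")
    champion = variants[0]
    for challenger in variants[1:]:
        # run_test stands in for a full A/B test and returns the winner's name.
        champion = run_test(champion, challenger)
    return champion


# Hypothetical conversion rates, used here in place of real test results.
observed_rates = {"Headline A": 0.031, "Headline B": 0.038, "Headline C": 0.035}


def pick_higher_rate(a: str, b: str) -> str:
    return a if observed_rates[a] >= observed_rates[b] else b


print(find_top_variant(list(observed_rates), pick_higher_rate))  # prints "Headline B"
```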

OptiMonk’s Smart A/B Tests can optimize various text elements on your landing pages, including:

- Headlines and subheadlines

- CTA button texts

- Product descriptions

Here’s how to set up a Smart A/B Test with OptiMonk:

- Start a new campaign and add your domain: Select or add the domain where you’d like to run your Smart A/B Test.

- Get recommendations or create your own test: OptiMonk can recommend landing page elements for optimization or you can create your own A/B test.

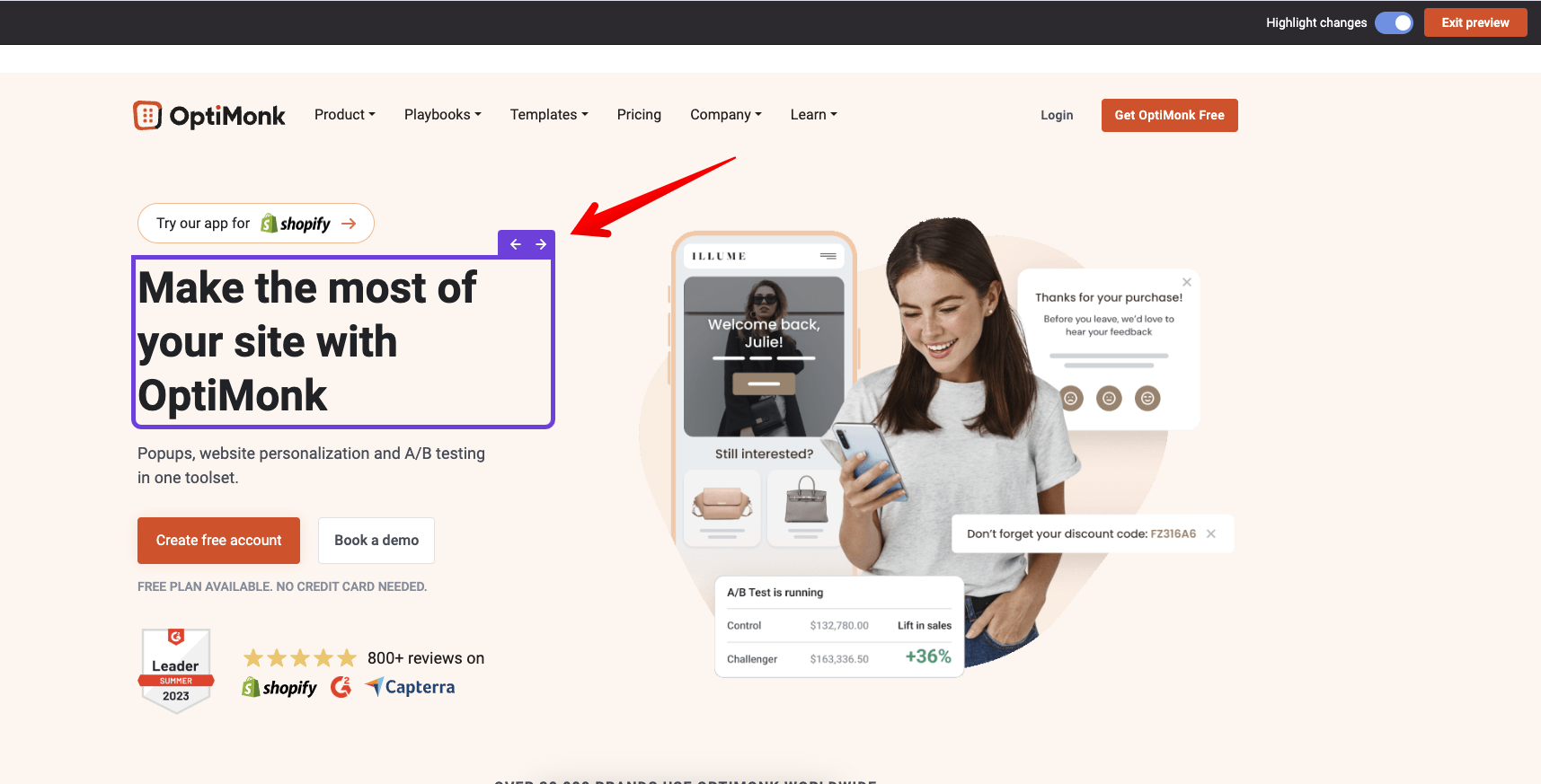

- Launch the Dynamic Content Editor: OptiMonk will launch your website within its Dynamic Content Editor. Navigate to the specific page you wish to test and begin making changes.

- Generate alternative headlines: OptiMonk will automatically generate alternative headlines for you to test against your original headline.

- Preview your variants: Click on Preview to see how your page appears with different headline variants.

- Save your variants: After adding your variants and clicking Save, specify the pages where your A/B test should run.

For a more detailed guide, check out our support article.

Companies typically run tests for a few months or a business cycle, but every case is different. Ideally, you want to keep the test running until it reaches a 95% confidence level.

4. Measure your results

Measuring the results of your A/B test is quick and easy.

Once your tests reach a 95% confidence level, you have enough information to conclude the test. Then, select the variant with the highest conversion rate as your champion.
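Your testing tool will normally report the confidence level for you. If you want to sanity-check it yourself, one common approach is a two-proportion z-test, sketched below. This is a standard textbook method and an assumption on our part, since tools may use different statistics. The function names and visitor counts are made up for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        return 1.0  # no conversions (or all conversions) in both groups
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(p_value: float, confidence: float = 0.95) -> bool:
    """True when the result clears the chosen confidence level."""
    return p_value < 1 - confidence


# Hypothetical results: control 300/10,000 conversions, challenger 360/10,000.
p = two_proportion_p_value(300, 10_000, 360, 10_000)
print(f"p-value: {p:.4f}, significant at 95%: {is_significant(p)}")
```

A p-value below 0.05 corresponds to the 95% confidence level mentioned above.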

5. Plan your next test

Don’t think of A/B testing as a one-time thing. Look at it as an ongoing process because customer preferences change over time. Plus, there’s always room for improvement, especially when you’re creating customer-focused content.

Use the insights from current tests to plan and optimize future tests.

Every time you want to redesign your web pages or change the button size on your opt-in form, run an A/B test to see which version visitors prefer.

Evaluate your landing pages in Google Analytics and look at metrics like bounce rate, conversion rate, and time spent on a page. If there’s room for improvement, think of ways to change and test your pages.

4 real-life A/B testing examples

Now that you know how to run an A/B test, you might be wondering: do they really work?

Absolutely!

A/B testing is one of the most effective conversion optimization solutions you have. Here are four case studies that prove it.

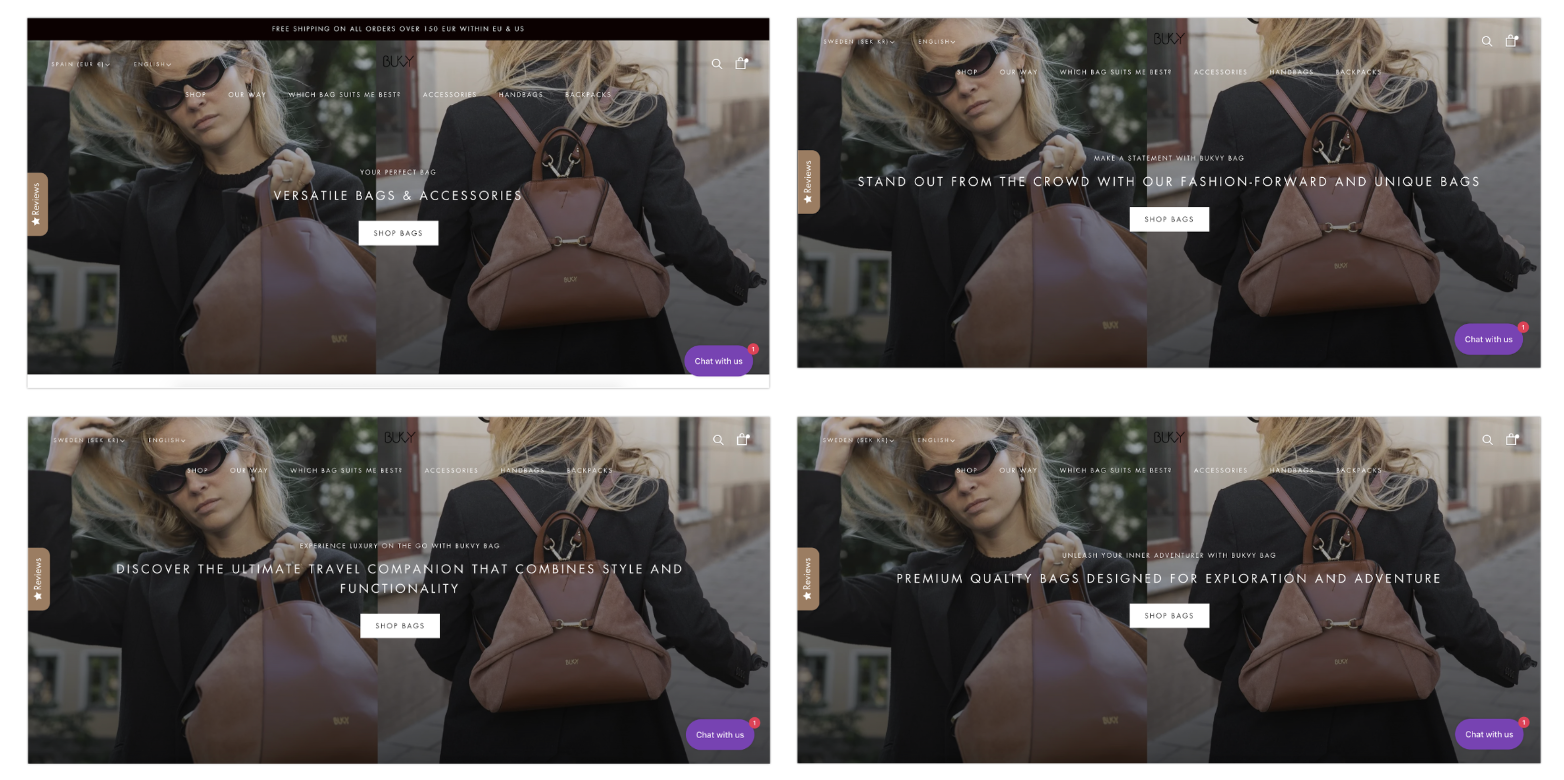

1. Bukvybag’s landing page headline test

Bukvybag was struggling with a low conversion rate on their homepage. They used OptiMonk’s Dynamic Content feature to uncover the headlines that attracted the most customers.

By testing variations of their headline, Bukvybag achieved a 45% increase in orders.

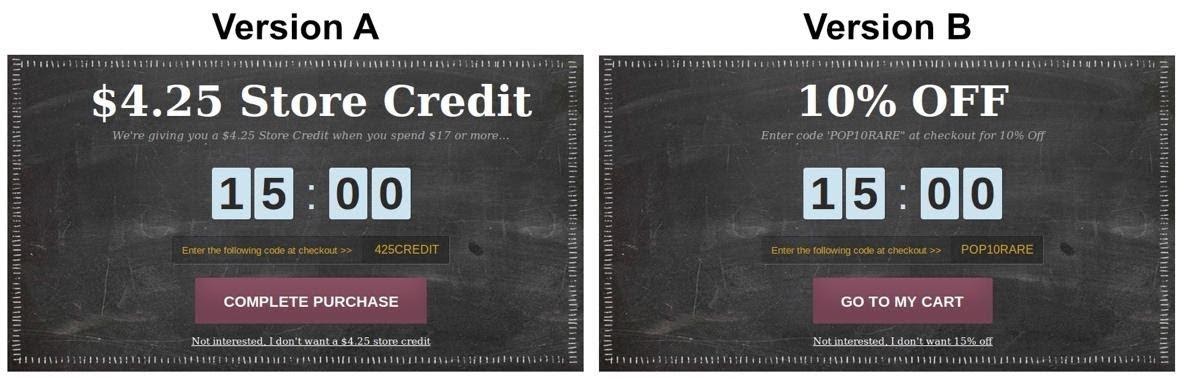

2. Boot Cuffs & Socks’ offer test

Women’s footwear company Boot Cuffs & Socks created two versions of a popup to encourage visitors to complete their purchases.

One offered a $4.25 store credit, and the other gave a 10% discount.

The test revealed that people preferred the 10% discount, leading to a 15% higher conversion rate and a 280% monthly return on investment.

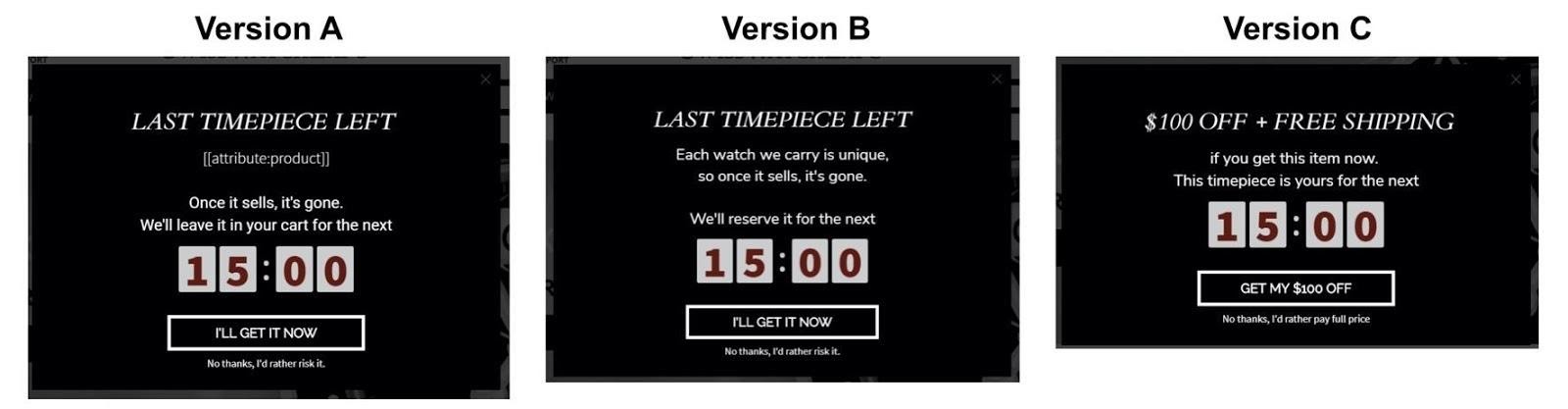

3. SwissWatchExpo’s value proposition test

SwissWatchExpo used OptiMonk to test three popups. The winning variant, which offered a $100 discount and free shipping, achieved a 28% conversion rate.

This successful test led to a 27% increase in online transactions and a 25% increase in revenue within three months.

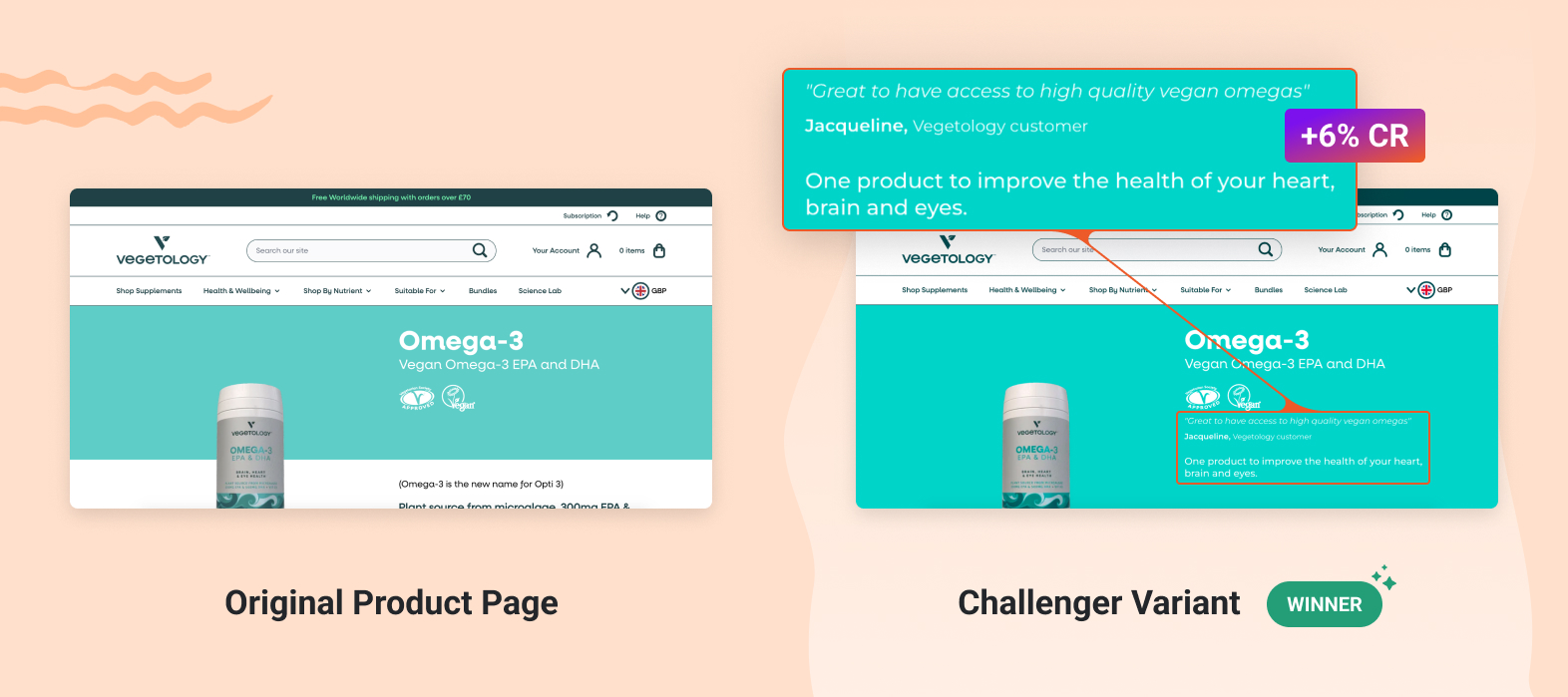

4. Vegetology’s product page test

Vegetology’s products have generated lots of amazing reviews, but they weren’t showing them off effectively. Their testimonials were buried at the bottom of their product pages, where nobody could see them.

So they A/B tested adding social proof to the above-the-fold section. They found that the challenger variant that included the customer testimonials at the top of the page led to a 6% increase in the conversion rate and a 10.3% increase in unique purchases.

FAQ

What is multivariate testing?

Multivariate testing is an advanced form of A/B testing where multiple elements are tested simultaneously to determine the best combination. Instead of testing just one variable, like a headline or a call-to-action button, multivariate testing examines how different combinations of elements interact with each other. This allows marketers to understand which specific changes contribute most to improvements in conversion rates or user engagement.

For example, you might test different headlines, images, and button colors at the same time on a landing page. Multivariate testing helps identify the best-performing combination out of all possible variations.
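To see why multivariate tests need a lot of traffic, it helps to count the combinations. Here’s a small sketch with made-up headlines, image file names, and button colors:

```python
from itertools import product

# Hypothetical options for each element on the landing page.
headlines = ["Save 10% today", "Free shipping on every order"]
images = ["lifestyle.jpg", "product.jpg"]
button_colors = ["green", "orange", "blue"]

# Every possible combination of headline, image, and button color.
combinations = [
    {"headline": headline, "image": image, "button_color": color}
    for headline, image, color in product(headlines, images, button_colors)
]

print(len(combinations))  # 2 * 2 * 3 = 12 combinations
print(combinations[0])
```

Each combination needs enough visitors on its own, so your traffic gets split twelve ways here instead of two.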

How do you achieve statistically significant results?

To achieve statistically significant results, you need to:

- Ensure a large enough sample size: The more data you collect, the more reliable your results. Use an A/B testing sample size calculator to determine the right number of participants.

- Run the test for an adequate duration: Don’t rush your test. Run it for a period long enough to account for variations in user behavior.

- Focus on one variable at a time: Testing multiple variables simultaneously can muddle results. Stick to one variable per test unless you’re conducting a multivariate test.

- Set clear goals and hypotheses: Know what you’re testing and why. A clear hypothesis helps you measure the impact of your changes accurately.

How do you calculate statistical significance?

To calculate statistical significance:

- Determine your control’s conversion rate: Identify the current performance metric of your control group.

- Set your minimum detectable effect (MDE): Decide the smallest change in conversion rate you want to detect.

- Choose your confidence level: Typically, a 95% confidence level is used.

- Use a statistical significance calculator: Input these values into an online calculator to determine if your results are statistically significant. These calculators consider sample size, conversion rates, and the desired confidence level to give you accurate results.

What are the best A/B testing ideas?

Some effective A/B testing ideas include:

- Headlines and subheadlines: Test different headlines to see which ones grab more attention.

- Call-to-action buttons: Experiment with button colors, text, and placement.

- Landing page layouts: Try different designs and structures to see which one leads to higher engagement.

- Email subject lines: Test variations to increase open rates.

- Product descriptions: Compare detailed versus concise descriptions to see what works better.

- Popup offers: Test timing, design, and offers to see which ones convert more visitors.

What are some key performance indicators you should track when running A/B tests?

When running A/B tests, track these key performance indicators (KPIs):

- Conversion rate: The percentage of visitors who complete the desired action (e.g., making a purchase, signing up for a newsletter).

- Bounce rate: The percentage of visitors who leave the site after viewing only one page.

- Click-through rate (CTR): The ratio of users who click on a specific link to the number of total users who view a page.

- Average time on page: How long visitors stay on your page, indicating engagement.

- Revenue per visitor (RPV): Average revenue generated per visitor.

- Exit rate: The percentage of visitors who leave the site from a specific page.
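Most of these KPIs are simple ratios, so you can compute them from raw counts. Below is a minimal sketch. The field names and numbers are hypothetical, and for simplicity it treats every visitor as a single session, which your analytics tool may not do.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one variant; the field names are illustrative."""
    visitors: int            # unique visitors who saw the variant
    conversions: int         # visitors who completed the desired action
    single_page_visits: int  # visitors who left after viewing only one page
    cta_clicks: int          # visitors who clicked the link being tracked
    revenue: float           # total revenue attributed to the variant


def kpis(stats: VariantStats) -> dict:
    """Compute ratio-based KPIs for a single variant."""
    if stats.visitors <= 0:
        raise ValueError("visitors must be positive")
    return {
        "conversion_rate": stats.conversions / stats.visitors,
        "bounce_rate": stats.single_page_visits / stats.visitors,
        "click_through_rate": stats.cta_clicks / stats.visitors,
        "revenue_per_visitor": stats.revenue / stats.visitors,
    }


# Hypothetical numbers for a control variant.
control = VariantStats(
    visitors=10_000,
    conversions=300,
    single_page_visits=4_200,
    cta_clicks=900,
    revenue=15_000.0,
)
for name, value in kpis(control).items():
    print(f"{name}: {value:.3f}")
```

Run the same calculation for the control and the challenger, then compare the two side by side.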

Wrapping up

A/B testing is an invaluable tool for improving your marketing efforts and optimizing user experiences.

By following these five easy steps—starting with a strategy, laying the groundwork, launching your test, measuring results, and planning your next test—you can make data-driven decisions that enhance your digital strategies.

This method not only helps you understand your customers better but also boosts your bottom line.

Remember, A/B testing isn’t a one-time task but an ongoing process. Customer preferences change, and there’s always room for improvement.

If you want to get started with A/B testing, create a free OptiMonk account now!
